Introduction

Hi there! This website contains tutorials, how-to guides, explanations, and reference documentation of the things we do at Tech Start UCalgary.

If you are looking for our main page, please visit us at techstartucalgary.com.

Demo & Pitch Nights

All Tech Start UCalgary projects are showcased to the world at the end of each academic year. At this event, people from different industries and backgrounds come and give us feedback on the projects.

This is also a great opportunity for you as a professional and us as a club to connect and network with different companies.

It's important to remember that as an entrepreneurial club, you will also be pitching your ideas to potential investors, so your hard work on the business strategy will be on display here. Pitching your start-up in a convincing manner is a critical skill, so we also give you the opportunity to practice this in the demos leading up to the final showcase.

Dates to Remember

More dates to be added soon!

| Date | Event | Audience | Location |

|---|---|---|---|

| 20 November 2025 | Elevator Pitch | Tech Start | ENG 207 |

Elevator Pitch

20 November 2025

Quick Info

Objectives

Let everyone know what your product idea is, present progress so far and next steps.

This is your first opportunity to get feedback from industry leaders and lets the executive and project support teams know how far along you are, and what your vision is as to best provide the support and resources you need.

There will be more pitches and demos to come, so consider this to be a good warmup where you can get some valuable feedback early in the process!

Start Time

6 PM sharp

Time Limit

Hard cutoff at 5 minutes

Then 5 minutes for Q&A

What to do

Clearly state what exactly you're doing, what problem you're solving (if applicable), and how you plan to approach it.

Make it engaging and interesting, leave the audience with something memorable.

In your slide deck, avoid walls of text at all cost, use visuals instead (e.g. user workflows, roadmap/timeline, images relevant to the topic being discussed).

We recommend not having more than 3 people presenting as this really messes with your presentation's flow as you hand off to them.

Speak loudly and clearly so everyone can hear you! Use the LAV mic if possible, and learn how to use it correctly. This video may help. Whatever you do, do not speak into it directly, you will make very loud and annoying popping sounds.

Rehearse your pitch and keep it under the time limit.

What not to do

- Don't get bogged down by the details

- Avoid overwhelming your audience with excessive details, stick to the big picture, what your idea is and why it matters

- Don't assume prior knowledge

- Explain your concept as if you’re presenting to someone hearing about this problem for the first time, keep it simple and intuitive

- Don’t get too technical

- Assume your audience does not have a thorough programming or engineering background, skip the jargon, acronyms and in-depth explanations of the technical processes that are behind how the product works (unless asked about it).

- Don't forget the why

- It’s not just about what your idea does, it's about why it matters, who it benefits and what problem it solves

- Don’t go over time

- Going overtime can make your pitch feel less focused and polished compared to other teams. Staying within the time limit helps you deliver your key points clearly and confidently, which will lead to stronger feedback from the judges — and make a better impression on potential investors down the road.

- Lose your enthusiasm

- If you sound uncertain or uninterested, your audience will be too, show passion and belief in your idea!

Suggested talking points

These are just suggestions and not at all mandatory, but may help get you started.

The Problem

- Fron a business strategy perspective think about your problem statement

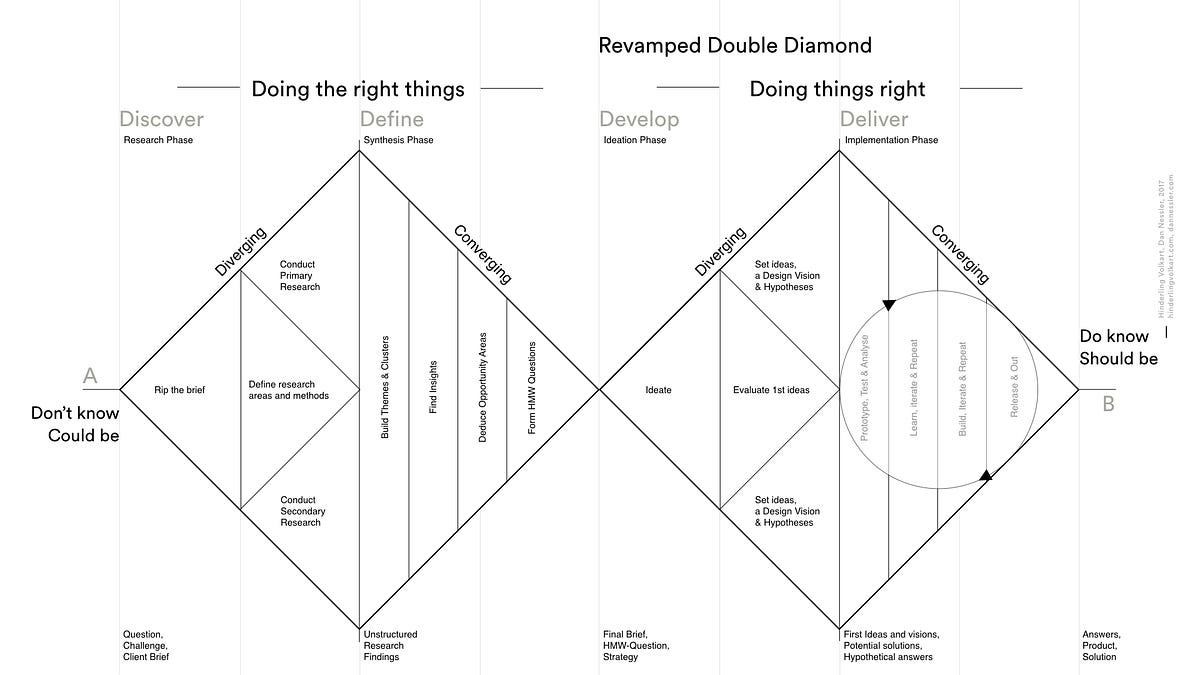

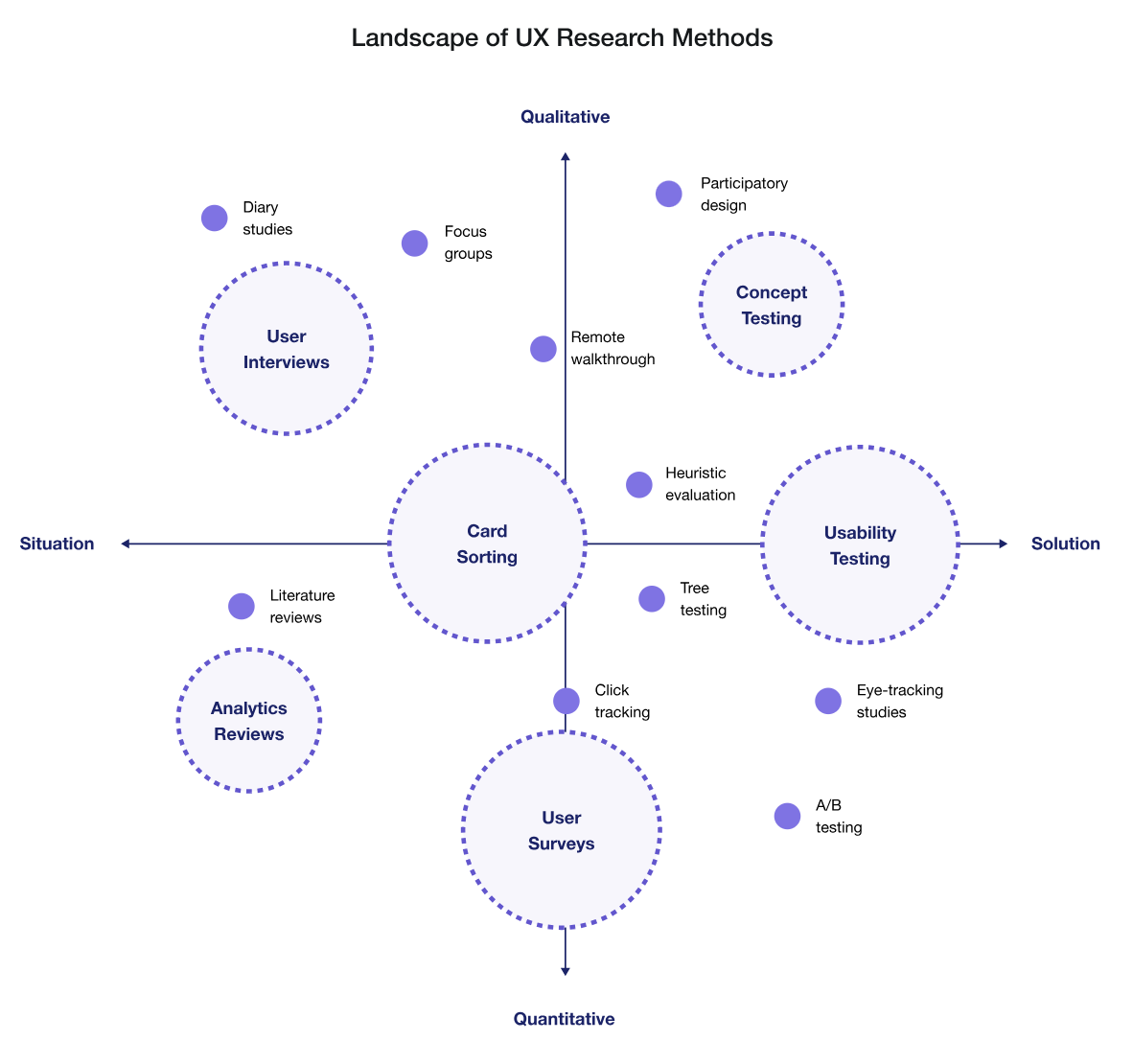

- From a design standpoint look at your findings from affinity and empathy mapping (Slides 11-21 with FigJam Template)

The Solution

- From a business strategy perspective try to hit these points.

- Think about your HMW statement (Slide 23 from the Nov. 6 Design Workshop) and look at this FigJam example (Slide 26 from the Nov. 6 Design Workshop, and think about how you could integrate them into the explanation of your solution.

The Target Audience

-

From a business strategy perspective look at these resources:

-

From a design perspective look at these resources:

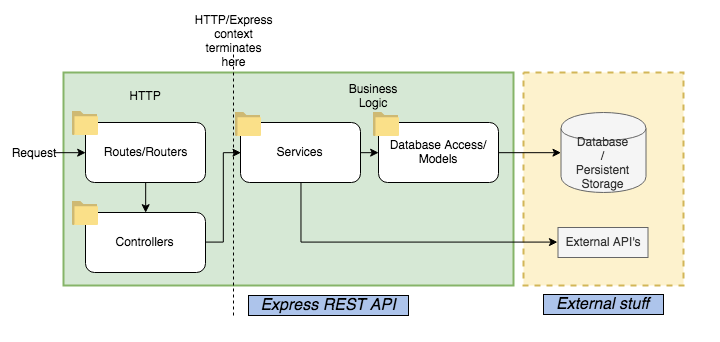

Tech Stack

- If you choose to speak on the software development side of this project in this early pitch, keep it to just the tech stack (the languages, services and frameworks you'll be using and how they play a role in making your product work), do NOT go into the minutia of your software architecture.

Progress made so far and next steps

- From a business strategy standpoint, discuss traction

- Add a timeline or a roadmap to your presentation! Look into FigJam Milestones, Roadmap, & WorkFlow, Slides 30-32

Interim Showcase

12 February 2026

The Interim Showcase is your second opportunity to clearly communicate what you’re building, why it matters, and why your solution stands out to industry guests, and for the first time this semester, the general public. In a concise 7-minute pitch, we’re looking for a strong demo, a clear problem and target audience, an explanation of how your solution works, and confidence in both the technical and business aspects. By the end, the audience should understand your product’s vision, value, and potential impact.

Time

6PM - 8PM

Location

ICT 114

Time per team

Hard cutoff at 15mins

Target 7 minutes for your presentation, 3 minutes for Q&A (10 minutes total - remember that your pitch going for much longer than the other teams can make it feel less focused and polished in comparison.)

Dress code

Business casual, try to coordinate with your team for a professional cohesive look

Key Elements

Demonstration (Video or Live Encouraged)

- Provide a compelling demonstration of the product through a pre-recorded video or a live walkthrough to showcase its functionality.

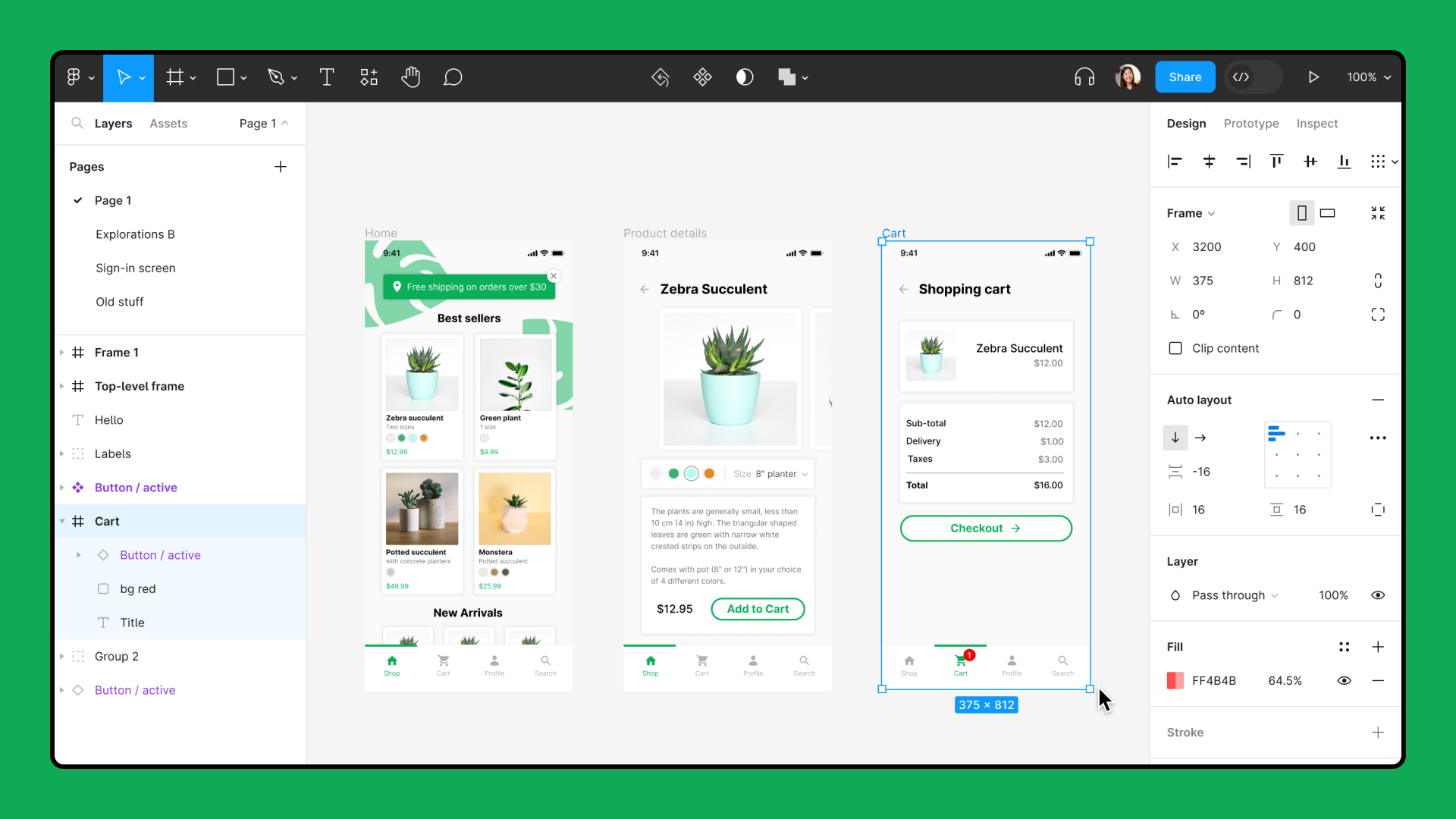

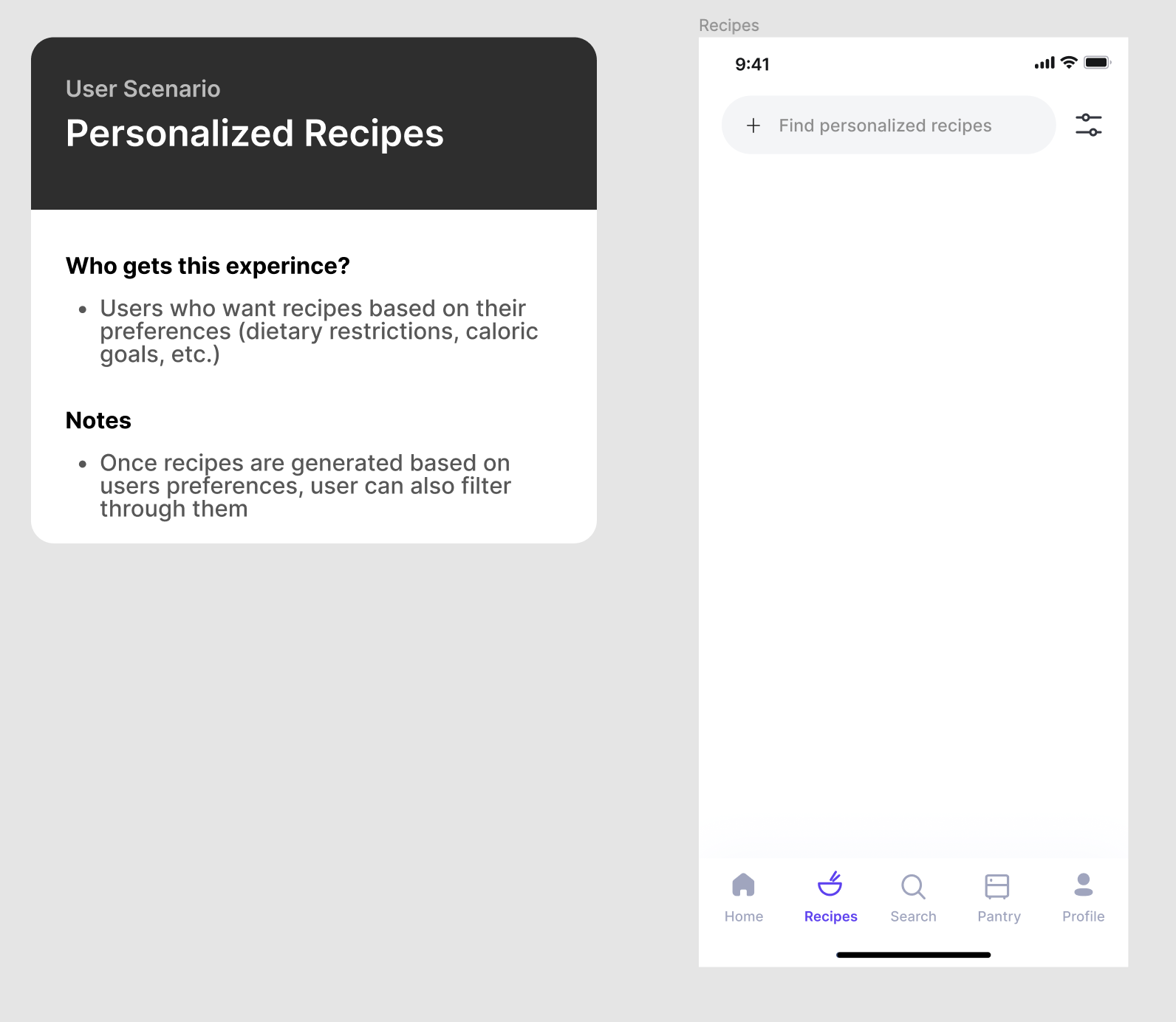

- If you are not in a state where a demo is possible / all the features can’t be shown feel free to add to the Figma Designs to your slides.

Brand Identity (Final Name & Logo)

- Present the finalized product name, slogan and logo to establish a strong brand presence.

- Doesn’t need to be a formal slide but should be apparent on the slide deck/ introduction.

Technical Knowledge (No Code Submission, But Understand the Codebase)

- While no code submission is required, be prepared to explain the architecture, technology stack, and key technical decisions.

Problem Statement (Why This Matters & Target Audience)

- Clearly define the problem your product solves, why it’s important, and who the primary users are.

Solution

- How you are solving the problem and why people would be willing to pay for your solution

- If you’re introducing a new or unique workflow or technology, talk about it and briefly discuss how it's implemented.

Go-To-Market Strategy & Roadmap

- Give some indication of how large the target market is (Could do TAM, SAM, SOM market sizing)

- Outline how you plan to attract users, the development timeline, key milestones, and when revenue generation will begin.

Revenue Model (How You Make Money)

- Explain the business model, pricing strategy, and revenue streams.

Competitive Analysis (Market Landscape & Why You’re Better)

- Compare your solution to existing competitors, highlighting your unique advantages through a competitive matrix.

(Optional) Team Composition & Skills

- Provide an overview of your team, their expertise, and how their skills contribute to the success of the project.

Example Pitch Presentation

Keys to Success

- Understand your audience:

- What might their backgrounds be? Are there any jargon I am using?

- What do they know about my project? What message/impact do I hope to leave them with?

- Complete your slide deck

- Practice your 7 minute pitch -- and make sure to consider the length of your demo video (if you choose to have one)

- Complete your demo video

- Have your tech stack and architecture solidified

Tips

- Have 2-3 designated presenters for your team

- No need to show/explain code live, but be ready to answer questions about your tech stack, and how you implemented specific features of your project.

Important Note

- Please send your final presentations to the presidents via discord by February 11 at noon.

Final Showcase

NOTE: THIS IS FROM LAST YEAR - ONLY USE AS A REFERENCE FOR WHAT THE EVENT WILL LOOK LIKE => WILL UPDATE WITH NEW DETAILS AS WE GET CLOSER TO THE DATE

Objectives

Share your hard work over the last year to the club, our sponsors, and industry guests.

Get invaluable feedback on your project to guide your further development beyond TechStart.

Quick Info

Venue

Collision Space, Hunter Hub for Entrepreneurial Thinking

Hunter Student Commons Room 401

Date - 3 May 2025

Time - 12:00pm to 4:00pm

Project teams, please arrive no later than 11:30am

Setup begins at 10:00am, so you're welcome to enter the venue from then on

Rubric

Judge Rubric _ Showcase 2025 - Rubric.pdf

Sample Presentation (2024 - CampusBuddy)

Final Showcase- CampusBuddy2024.pdf

Schedule

| 12:00pm | - | 12:30pm | Light Networking & Snacks |

| 12:30pm | - | 12:45pm | Introductions |

| 12:45pm | - | 1:45pm | Project Presentations (1-3) |

| 1:45pm | - | 2:00pm | Intermission |

| 2:00pm | - | 2:45pm | Project Presentations (4-5) |

| 2:45pm | - | 3:30pm | Judging Break & Bingo |

| 3:30pm | - | 4:00pm | Feedback & Awards |

| 4:00pm | - | 4:30pm | Closing Remarks |

Presentation Order

- CONN-X

- Event Ease

- Agrivanna

- Earth's Echo

- Callia

Presentation Time Limit

15 minutes for presentation (hard limit)

We will have a timer clearly visible for you

5 minutes for questions

We will also be allowing 3 mins for each team during the judging break to let the judges play with their product.

Presentation Logistics

There are 3 microphones: 1 clip-on, 1 hand-held, and 1 on the podium. If you're presenting it's a good idea to be familiar with how to use these to make your presentation go more smoothly.

MacBooks with Apple Silicon chips have had issues with the projector system, so bring Intel MacBooks or Windows/Linux devices.

We will have USB-C to HDMI adapters if you need, but we suggest having your own prepared and testing it out before the presentations start.

Dress Code: Business Casual or Business

Rubric

There are no predefined milestones or goals for the final showcase, as every team has their own specific goals.

Our judges will be using the rubric below to give feedback, as well as general feedback to each team.

They will also choose winners for the following categories: Best Overall, Best Design, and Best Technology.

Note, one team can win more than one award.

Installfest: From zero to hero

Introduction

We understand that setting up your computer for development can be a daunting task. There are so many tools and libraries that you need to install, and it's hard to know where to start. That's why we created this guide to help you get started with your development environment.

This guide will help you install all the tools you need to start coding. It will also help you configure your computer so that you can easily switch between different versions of Node.js and Python. Your don't need to install everything in this guide, only the tools you need for your project. If you are not sure what tools you need, ask your project lead or one of the exec members.

Table of Contents

- Installfest: From zero to hero - Introduction - Table of Contents - Windows Users - Installing Homebrew - Installing VSCode: MacOS - Installing VSCode: Windows WSFL2 - Installing Git - Installing bash-completion - Installing Node - Installing Nvm - Installing Python - Installing Pip - Installing Pyenv - Downloading Xcode - That's it!

Windows Users

If you are using Windows, we recommend that you install WSL2 and Ubuntu as your development environment. You can find instructions on how to do so here: WSL2 Installation Once you have installed WSL2 and Ubuntu, you can continue with this guide.

Installing Homebrew

Homebrew is a package manager for macOS (or Linux) that allows you to easily install and manage software packages and libraries. It simplifies the installation process by automating the installation of dependencies and providing a consistent interface for installing software. To install Homebrew, run the following command:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

If you are using Windows, run the following commands:

test -d ~/.linuxbrew && eval "$(~/.linuxbrew/bin/brew shellenv)"

test -d /home/linuxbrew/.linuxbrew && eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

test -r ~/.bash_profile && echo "eval \"\$($(brew --prefix)/bin/brew shellenv)\"" >> ~/.bash_profile

echo "eval \"\$($(brew --prefix)/bin/brew shellenv)\"" >> ~/.profile

To check if Homebrew is installed, run the following command:

brew --version

It should return something like this:

Homebrew 3.2.0

Homebrew/homebrew-core (git revision 3b6; last commit 2021-07-26)

Installing VSCode: MacOS

Visual Studio Code is a free source-code editor made by Microsoft for Windows, Linux and macOS. Features include support for debugging, syntax highlighting, intelligent code completion, snippets, code refactoring, and embedded Git. To install VSCode, run the following command:

brew install --cask visual-studio-code

To check if VSCode is installed, run the following command:

code --version

Installing VSCode: Windows WSFL2

To install VSCode, download it from the following link: VSCode Installer Once downloaded, open VScode in Windows.

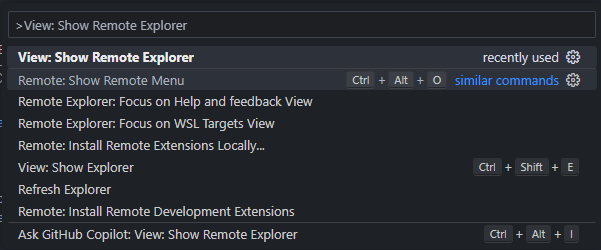

Open the remote explorer by pressing Ctrl+Shift+P and typing View: Show Remote Explorer and selecting WSL Targets.

Then select Ubuntu 20.04 or the version of Ubuntu you installed. This will cause some reinstalling to happen, but once it's done, you will be able to use VSCode with Ubuntu.

To check if WSL2 is connected with VSCode, go to the WSFL2 terminal and run the following command:

code .

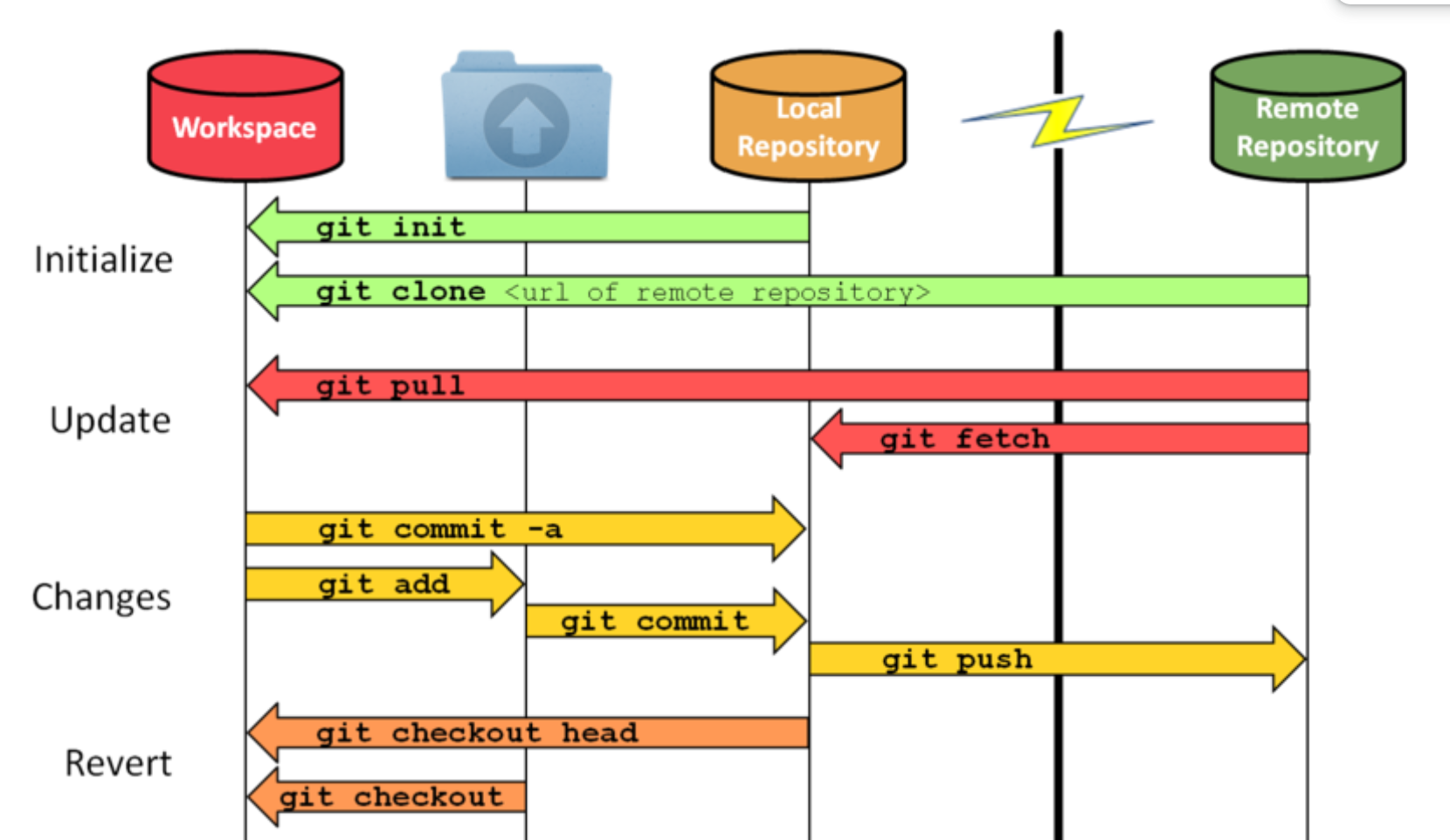

Installing Git

Git is a free and open-source distributed version control system designed to handle everything from small to very large projects with speed and efficiency. It is the most widely used modern version control system in the world today. To start using Git, you need to run the following command:

brew install git

To check if Git is installed, run the following command:

git --version

To configure Git, run the following commands:

git config --global user.name "YOUR_NAME"

git config --global user.email "YOUR_EMAIL"

Then donwload the github cli:

brew install gh

and authenticate with your github account:

gh auth login

to check if it worked:

gh auth status

Installing bash-completion

bash-completion is a collection of shell functions that take advantage of the programmable completion feature of bash. It provides completions for various commands, including git, npm, and others. To install bash-completion, run the following command:

brew install bash-completion

To configure bash-completion, run the following command:

code ~/.bash_profile

And add the following lines at the end of the file and save it:

if [ -f $(brew --prefix)/etc/bash_completion ]; then

. $(brew --prefix)/etc/bash_completion

fi

Installing Node

Node.js is an open-source, cross-platform, back-end JavaScript runtime environment that runs on the V8 engine and executes JavaScript code outside a web browser. It allows developers to use JavaScript to write command-line tools and for server-side scripting—running scripts server-side to produce dynamic web page content before the page is sent to the user's web browser. To install Node, run the following command:

brew install node

To check if Node is installed, run the following command:

node --version

Installing Nvm

nvm stands for Node Version Manager. It is a tool that allows you to easily install and manage multiple versions of Node.js on a single machine. This is useful for developers who need to work with different versions of Node.js for different projects. To install nvm, run the following command:

brew install nvm

To configure nvm, run the following command:

mkdir ~/.nvm

Then open your ~/.bash_profile using:

code ~/.bash_profile

And at the following lines at the end of the file and save it:

export NVM_DIR=~/.nvm

source $(brew --prefix nvm)/nvm.sh

Close and reopen your terminal. To check if nvm is installed, run the following command:

nvm --version

To change versions of node, run the following command:

nvm install <version>

for example:

nvm install 14.17.6

To see which version are available, run the following command:

nvm ls-remote

make sure you team is using the same version of node for consistency and to avoid errors.

Installing Python

Python is an interpreted, high-level, general-purpose programming language. Created by Guido van Rossum and first released in 1991, Python's design philosophy emphasizes code readability with its notable use of significant whitespace. To install Python, run the following command:

brew install python

By default, Homebrew installs Python 3.x. To check if Python is installed, run the following command:

python3 --version

Installing Pip

pip is a package management system used to install and manage software packages written in Python. Many packages can be found in the Python Package Index (PyPI).

brew install pip

To check if pip is installed, run the following command:

pip --version

Installing Pyenv

pyenv is a simple yet powerful tool that allows you to easily install and manage multiple versions of Python on a single machine. This is useful for developers who need to work with different versions of Python for different projects. Using pyenv will also allow you to group your project dependencies into a single virtual environments, which will make dependency management much easier. To install pyenv, run the following command:

brew install pyenv

To check if pyenv is installed, run the following command:

pyenv --version

pyenv will be useful for those who are working with Django or Flask.

Downloading Xcode

Recommended for those who are working with React Native. Xcode is an integrated development environment (IDE) for macOS containing a suite of software development tools developed by Apple for developing software for macOS, iOS, iPadOS, watchOS, and tvOS. To download Xcode, go to the App Store and search for Xcode.

That's it!

Now that you have installed all the tools you need, you are ready to start coding like a PRO. If you have any questions, feel free to ask your project lead or one of the exec members.

WSL2 Installation

Introduction

WSL2 is a new version of the Windows Subsystem for Linux that is built on top of Hyper-V. It provides a full Linux kernel and userspace environment that runs natively on Windows. This means that you can run Linux applications on Windows without having to install a virtual machine or dual boot your computer. WSL2 is faster than WSL1 because it uses Hyper-V instead of VirtualBox. It also has better support for Docker and other container technologies.

Table of Contents

Installing WSL2

To install WSL2, you need to have Windows 10 version 2004 or higher. To check your Windows version, open your Powershell and run the following command:

winver

If you have an older version of Windows, you can upgrade to the latest version by going to Settings > Update & Security > Windows Update > Check for updates.

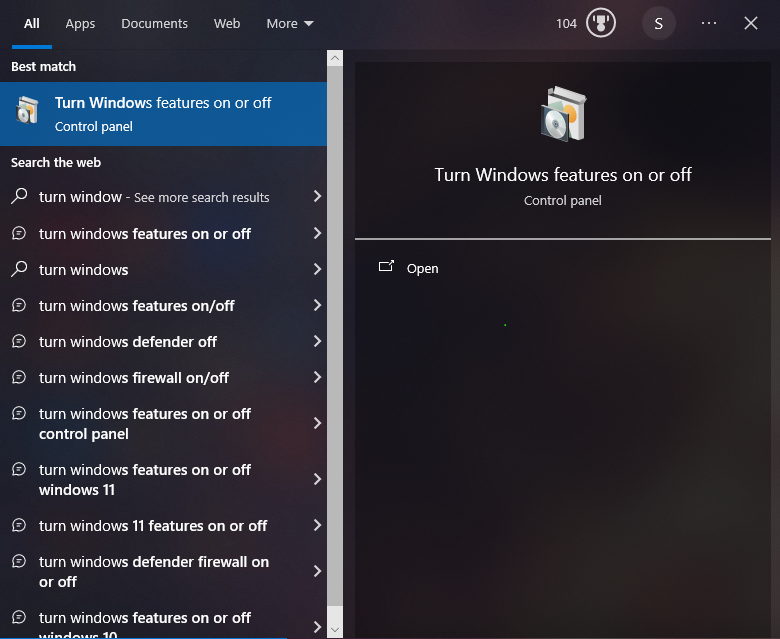

Then search for "Turn Windows features on or off" in the start menu and open it.

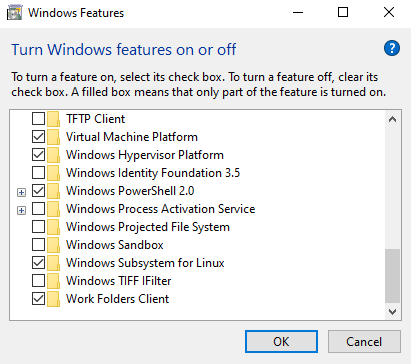

Then check the box next to "Windows Subsystem for Linux" and "Windows Hypervisor Platform" and click OK. You will be prompted to restart your computer.

Installing Ubuntu

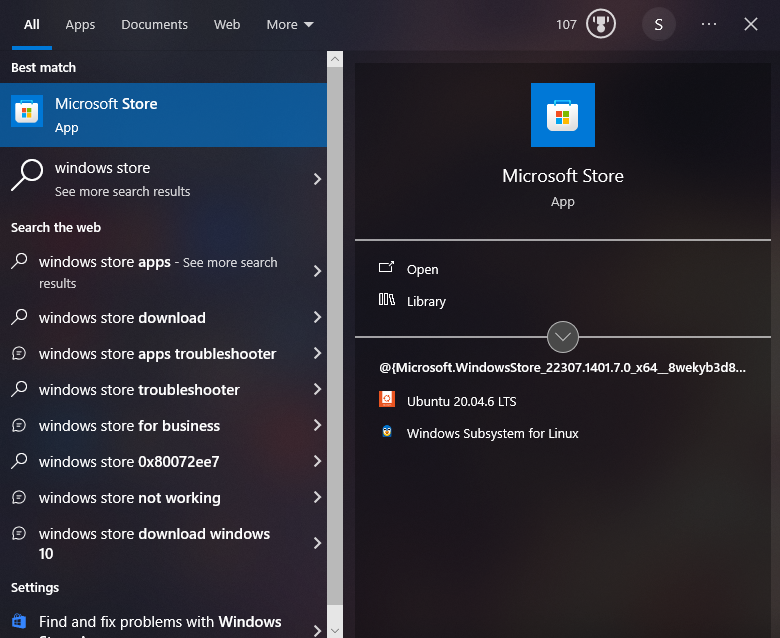

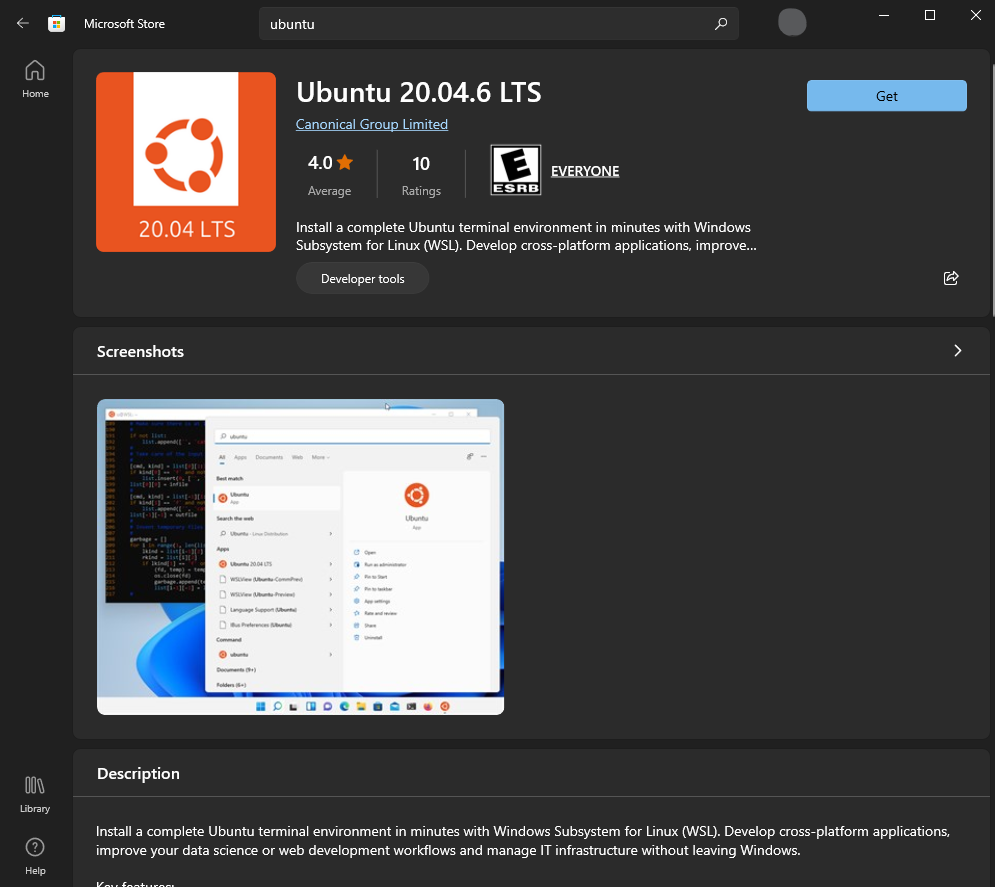

To install Ubuntu, go to the Microsoft Store and search for "Ubuntu". Then click on "Ubuntu 20.04 LTS" and click "Get".

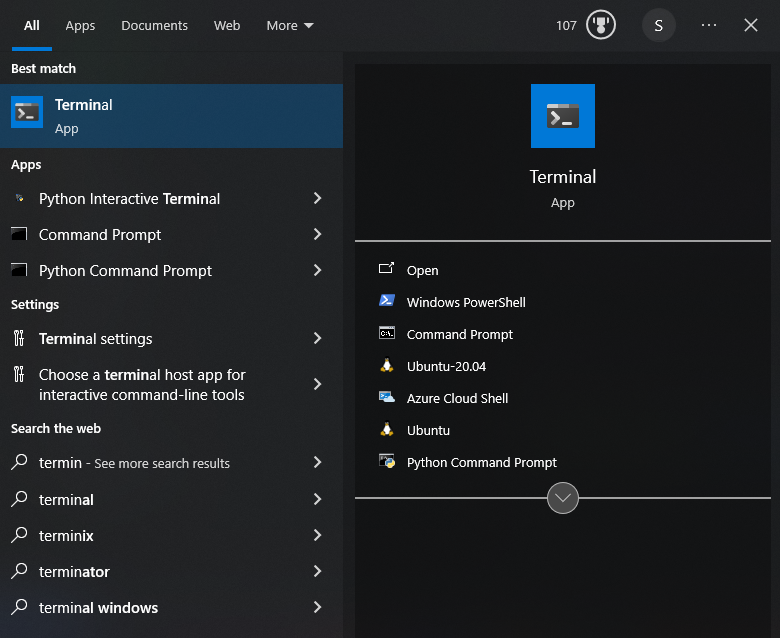

Once installed, you can launch Ubuntu by opening the terminal from the start menu.

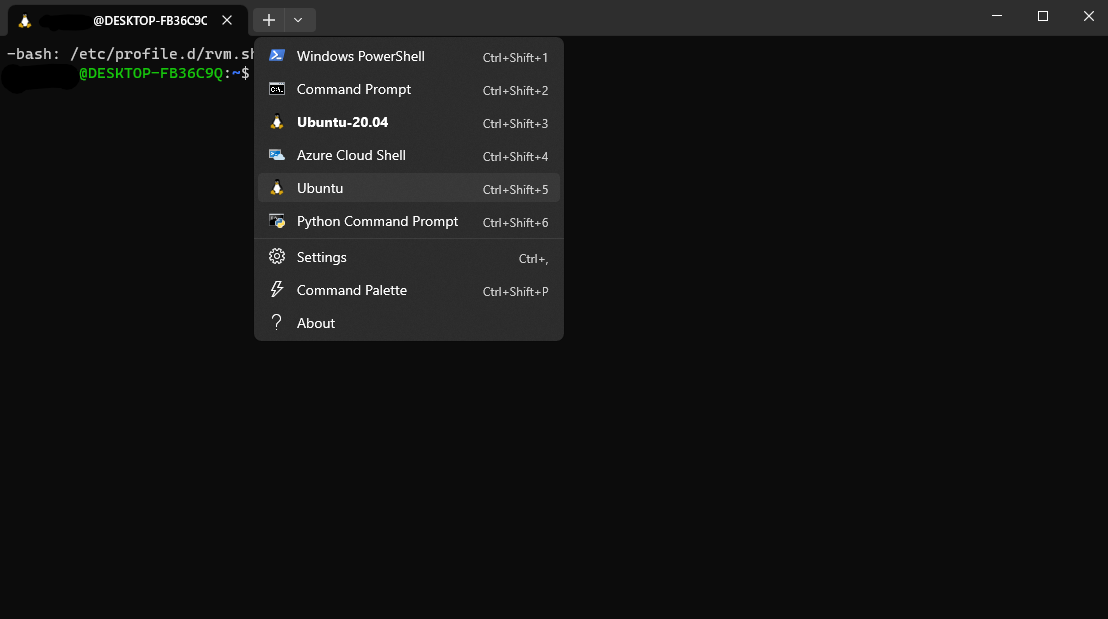

to switch to Ubuntu, click on the Ubuntu icon in the top left corner of the terminal window as shown below:

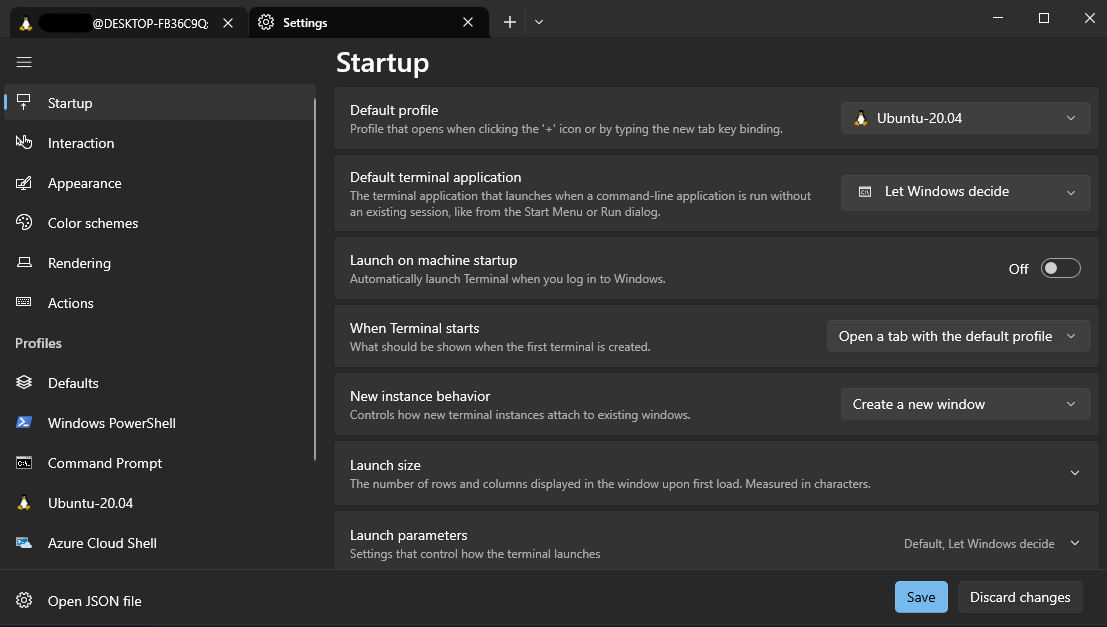

You can configure a default Ubuntu terminal by going to Settings > Terminal > Default profile > Ubuntu.

That's it!

You have successfully installed WSL2 and Ubuntu. Now you can start using Linux applications on Windows without having to install a virtual machine or dual boot your computer. For your project we recommend using WSFL2 and Ubuntu as your development environment. If you are done working, you can close the terminal window and it will automatically shut down the Ubuntu environment. Continue the installfest here: Installfest

I don't know how to use Linux, help!

If you are not familiar with Linux, we recommend that you take a look at the following resources:

At minimum, you should learn the following commands:

pwdlscdmkdirtouchrmmvcpcatsudo

Motivation

Guess what most of the top Software Developers have in common?

They all have cool projects!

torvalds, dtolnay, sindresorhus, donnemartin, kamranahmedse, jwasham, trekhleb, tiangolo, gre, vmihailenco, twpayne, brycedrennan, marten-seemann, rusty1s, kripken, krisnova.

And those projects are successful:

Tech Start UCalgary mission is to foster an environment where people can become a better version of themselves through creating software projects.

This website will guide you through the elements that make a project awesome while emphasizing industry best practices.

A good README.md

A README.md file in the root of the repository

is going to be the first things your users see.

GitHub renders this file on the main page of the repository.

This is an example from the NixOS organization:

It's important that it's professional and colorful, and that it grabs the user's attention.

I highly recommend the following elements are present on the first page:

-

Logo and name: A good logo is going to make the project look respected and professional.

A good name is able to encapsulate the project's purpose, or, at the very least, should give the project an identity.

This is an example from the Prettier project:

It looks good.

On the other hand, it encapsulates the purpose of the project: "Making your code prettier".

-

Slogan: A short, catchy phrase, summarizing what the project does and its most hot features.

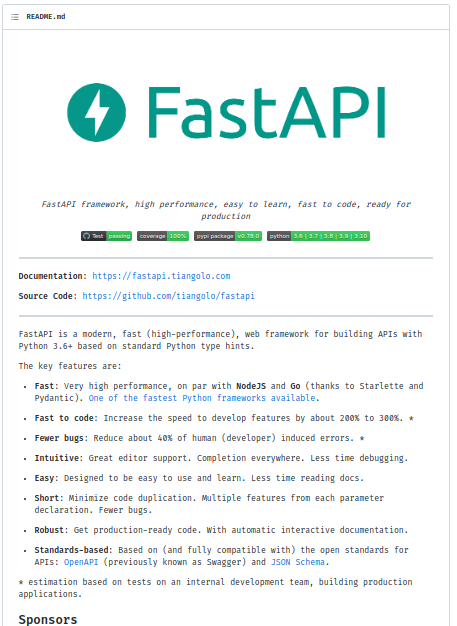

This is an example from the FastAPI project:

FastAPI framework, high performance, easy to learn, fast to code, ready for production.

Wow. Yes, I want high-performance APIs. I want things easy to learn, with no boilerplate, and robust enough for production.

While short, this slogan is very important because it's displayed on the project card:

It can be the first thing a new visitor sees and therefore can be the decisive factor for a user to click on it.

It's also displayed on the top of the project home page:

And sets the expectations for what comes next, it needs to be good.

-

Description: Here you will have a few paragraphs to explain what your project does, what problem it solves for your users, and why should a user be using it right now.

This is an example from the FastAPI project:

FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.6+ based on standard Python type hints.

The key features are:

-

Fast: Very high performance, on par with NodeJS and Go (thanks to Starlette and Pydantic). One of the fastest Python frameworks available.

-

Fast to code: Increase the speed to develop features by about 200% to 300%. *

-

Fewer bugs: Reduce about 40% of human (developer) induced errors. *

-

Intuitive: Great editor support. Completion everywhere. Less time debugging.

-

Easy: Designed to be easy to use and learn. Less time reading docs.

-

Short: Minimize code duplication. Multiple features from each parameter declaration. Fewer bugs.

-

Robust: Get production-ready code. With automatic interactive documentation.

-

Standards-based: Based on (and fully compatible with) the open standards for APIs: OpenAPI (previously known as Swagger) and JSON Schema.

* estimation based on tests on an internal development team, building production applications.

Here the user knows that FastAPI helps build APIs with Python. They should be using FastAPI right now because it's fast, intuitive, easy, and so on.

It's always a good idea to throw a few power-words like: "Fast", "Powerful", "Secure", and "Reliable", but of course, make sure that this is true.

You can further improve this section by adding emojis.

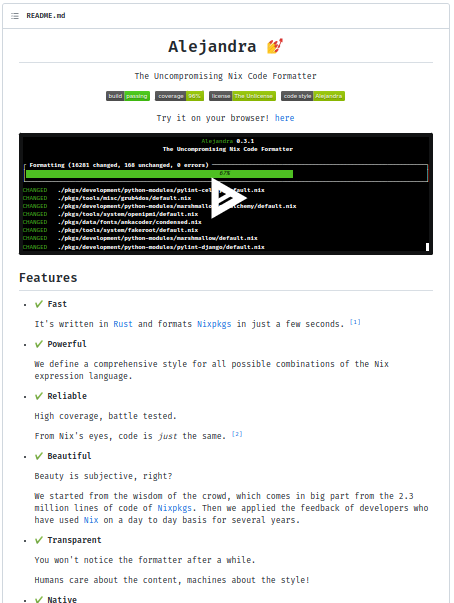

This is an example from the Alejandra project:

To this point, your users should have a clear understanding of:

- What problem does your project solve?

- How does it make the user's life easier?

- What is special about it? What are the key features?

But also make sure not to show unnecessary details. Try to find a balance. Too short and you'll leave questions unanswered. Too long and you'll bore or confuse them.

-

-

Getting Started: Users are interested at this point, they want action now.

Here we place the shortest path a user can take to interact with the project. If there are many, show the fastest/easiest one first, and then slowly introduce them to the more complex ones.

This is an example from the Alejandra project:

It simply tells the user to use a web version of the project. Everybody knows how to click a link, that's easy and nice.

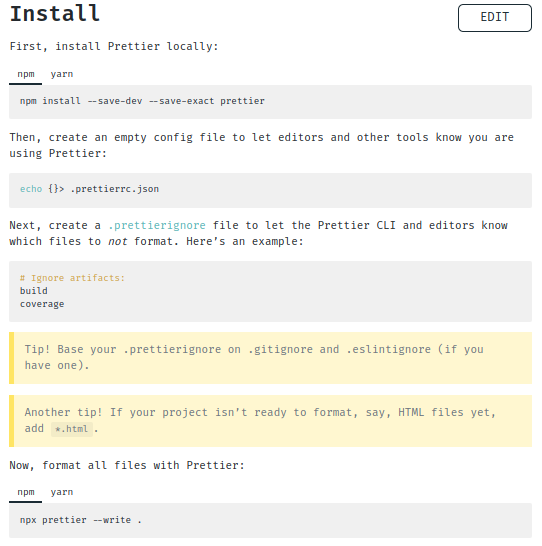

This is an example from the Prettier project:

Users familiar with Node JS will find the instructions easy to follow. However, some steps could have been removed and it would have worked the same. There is no need to overwhelm the user with details at this point. We can introduce the details later.

For example, a better version would be:

Install prettier with:

$ npm install --save-dev prettierMake your code beautiful with:

$ npx prettier --write .Remember to keep the first impression simple and intuitive.

Simplicity is key here.

Altogether, the following are examples of a good README:

-

Prettier:

-

FastAPI:

-

Alejandra:

Lastly, makeareadme.com also offers some templates and tips.

You may want to have a look over there.

A LICENSE.md

This is no legal advice.

For good or bad, all of the code that we write is subject to the following Intellectual Property rights:

-

Copyright: The right to reproduce, derivate, distribute copies, and use the copyrighted work, and to extend these rights to others.

-

Moral Rights: The right of being attributed as the author of the work, and the right to prevent prejudicial distortions of the work (integrity).

Both concepts can be expanded a little bit more by reading the following documents from the U.S. Copyright Office: 1, 2. It is a very interesting topic.

For the time being, think of these rights as a way for the rights holder to decide on who and what can be done with the protected work. Further, without explicit authorization from the rights holder, you are effectively limited by law from doing anything at all with the work, unless you can cite Fair Use.

If you go and read your employee contract, you'll find that the rights over the work you do for your employer are "assigned" to the employer (transferred from you to them), that you won't exercise your Moral Right of integrity over the work, and (with luck) that they'll respect your Moral Right of being attributed, plus many more clauses that are similar in nature.

There is also a third concept called The Public Domain, which are works to which no Intellectual Property rights apply, either because they expired, but usually, because they were expressly waived by the holder.

Whether the existence of these rights is good or bad I leave it for you to think about, but I'll throw some good articles and books here: 1, 2, 3, 4.

At Tech Start UCalgary we commit to Open Source everything under an Open Source Initiative approved license, but we recommend for source code:

- The Unlicense (Public Domain), or

- The MIT License (Permissive Copyright).

And for documentation:

- CC0 1.0 Universal (Public Domain), or

- Attribution 4.0 International (Permissive Copyright).

You can use the following resources to make an informed decision on your project:

We believe the previous licenses are good for the following reasons:

- They enable anyone to benefit from the software, without discrimination, which aligns well with the club's mission, and the fact that we are not seeking profit, but instead, to foster technology.

- It allows you or your team, or anyone in the world, to continue growing the project or creating a company out of it in the future.

Should you not wish to opt-out of the Copyright Monopoly

by releasing your work into the public domain,

remember that the rights are yours and not Tech Start UCalgary.

You haven't signed any Copyright Transfer Agreement

with us (and you won't)

so please use Copyright <year> The <project name>'s contributors,

where the license asks for it.

The projects are yours.

And in order to ensure future contributions adhere to your license, make sure to include the following text somewhere visible in your project, for instance at the end of the README.md:

- All of the code that you submit to our code repository will be licensed under the

<license>.- By submitting code to our code repository you also certify that you agree to the following Developer Certificate of Origin.

This constitutes a very simple Contributor License Agreement.

A RoadMap

A RoadMap is one of the best ways to manifest the future vision of your product and the strategy and steps that will be used for achieving that vision.

A RoadMap is also a great tool to cooperate with other people because you can prioritize on it the steps that are yet to be done, and see who's working on what, what progress has been made, and so on.

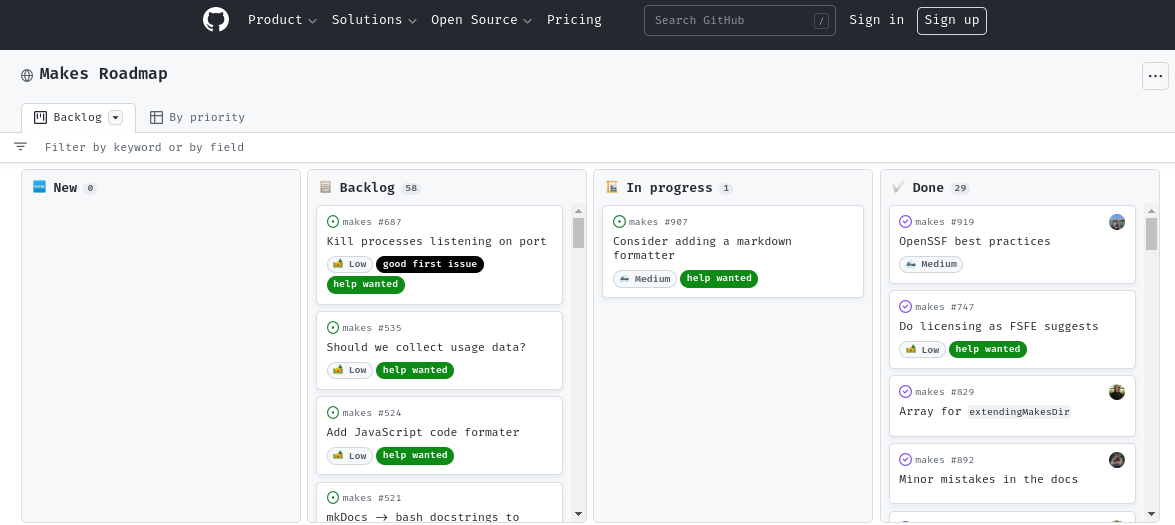

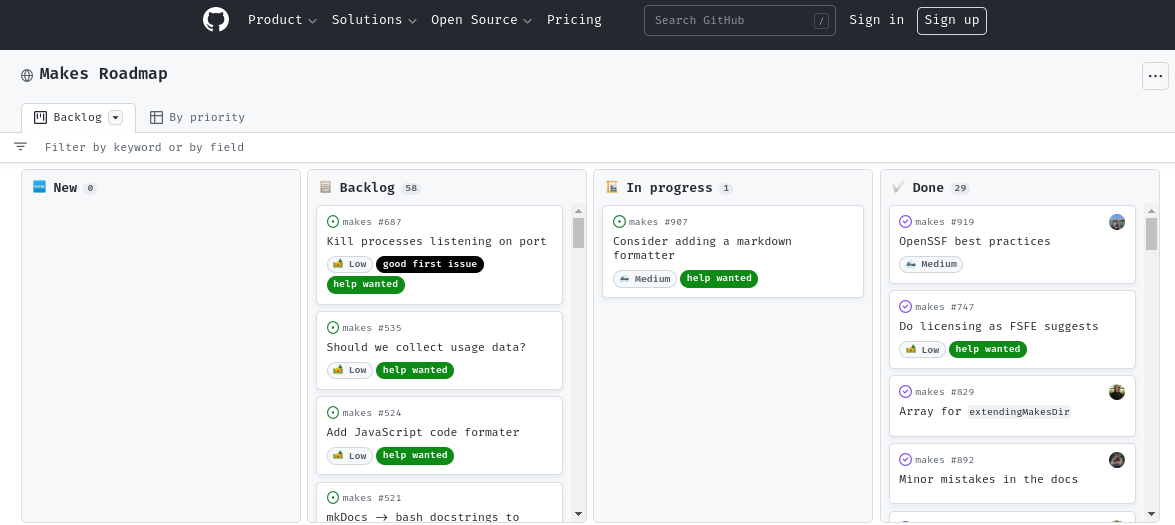

This is an example from the Makes project on GitHub:

That's a simple RoadMap, yet it has all the important information. That's nice!

I highly recommend you create your RoadMap using the Projects feature on GitHub because it integrates very well with other GitHub features like Issues, Pull Requests and Milestones, but it's also fine if you use Jira or Trello.

As a rule of thumb, anything related to making your project awesome can (and should) go into the RoadMap. It's also not limited to just programming tasks, you could perfectly add to the RoadMap a meeting with your users, some market research activity, or having some Pizza with the team.

A RoadMap is also a living creature, it evolves as the project evolves and you can iterate on it on the go. Don't worry if you don't get it perfectly from the beginning, but make sure you have one from the beginning!

How to create a RoadMap?

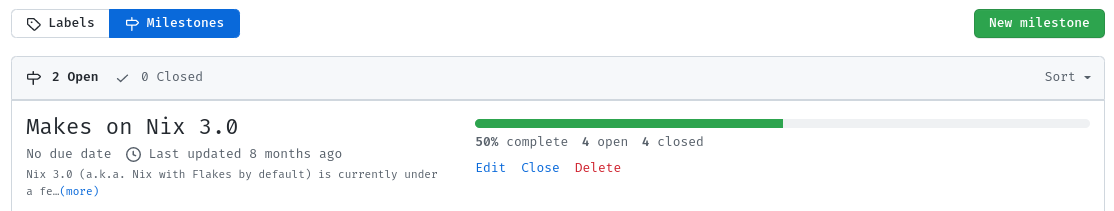

Step 1 - Start with Milestones

My suggestion is that you start by creating a few Milestones.

Think about what your project wants to achieve: Is it maybe a website that allows you to shorten URLs, like https://tinyurl.com?

What's the minimum viable product that you can deliver to your users so that they can start getting a taste?

You are right! An ugly webpage, with an ugly textbox and an ugly button, that when clicked, gives you a beautifully shortened URL :)

But that's even too far in the future. What about making your first Milestone about having an empty webpage? Your second Milestone could be having a backend that answers a ping request. Your third Milestone can be designing the database, such that it stores the shortened URLs; and your fourth Milestone can be adding the textbox and button to the front-end, and an endpoint in the back-end that stores and retrieves the shortened URL.

You see? we just planned a minimum viable product, using an agile methodology.

Congratulations!

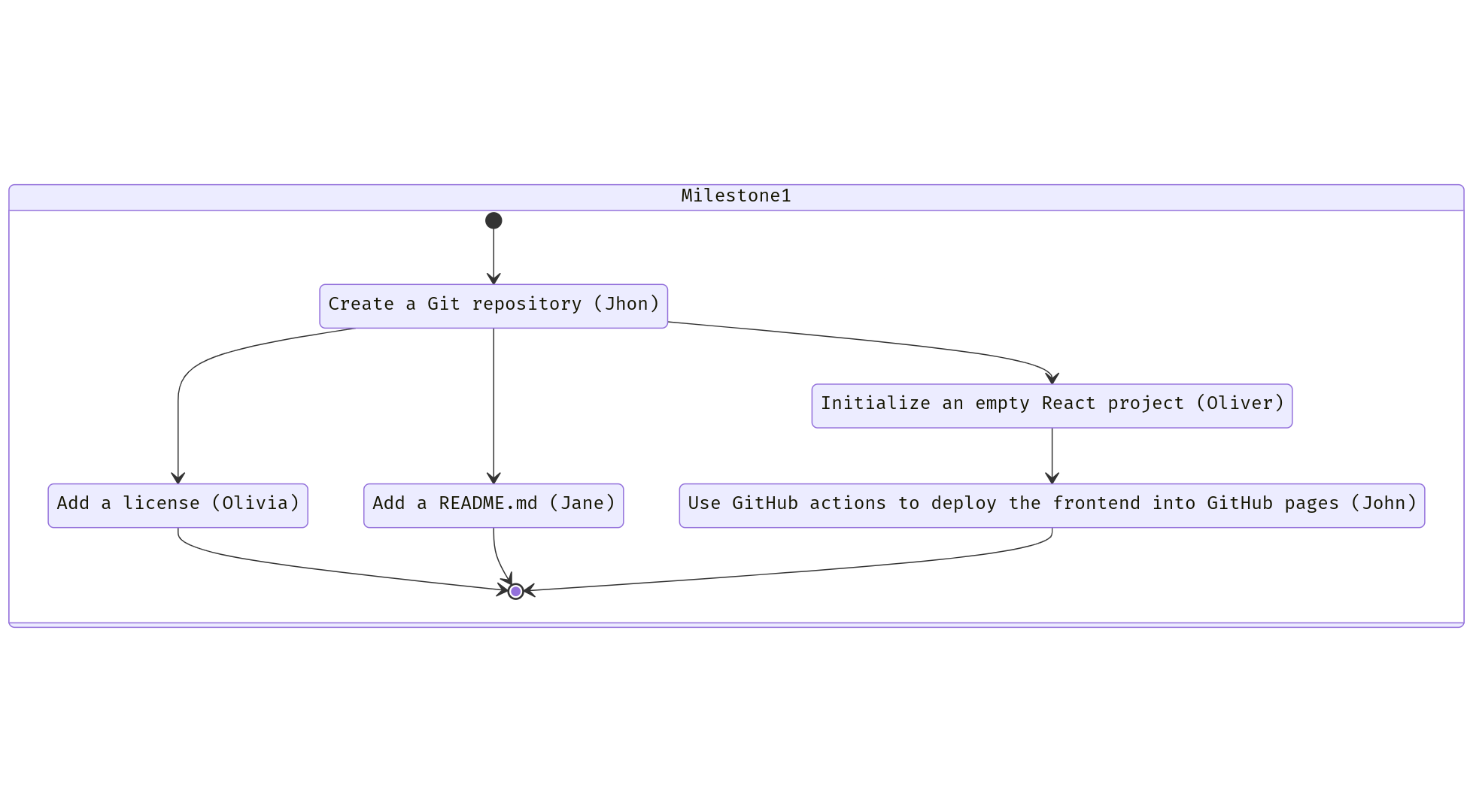

Step 2 - Split the Milestones in smaller Issues

Now we can proceed to define the individual tasks (GitHub Issues) that are needed for completing each Milestone, and most importantly, we can assign each member of our team an Issue, and work collaboratively towards the same goal.

Bonus points if we can plan it so that they can all work in parallel.

For example, for Milestone #1 (Having an empty webpage that the user can access), we could create the following Issues:

In words:

- Issue #1: Create a Git repository.

- Priority: Medium.

- Assignee: John.

- Issue #2, after Issue #1: Add a license.

- Priority: High.

- Assignee: Olivia.

- Issue #3, after Issue #1: Add a README.md.

- Priority: Medium.

- Assignee: Jane.

- Issue #4, after Issue #1: Initialize an empty React project.

- Priority: Medium.

- Assignee: Oliver.

- Issue #5, after Issue #4: Use GitHub actions to deploy the front-end into GitHub pages.

- Priority: Medium.

- Assignee: John. (John should be free, since finishing #4 means #1 was finished).

Boom! Done.

Now create more Issues for all of the other Milestones.

Step 3 - Add your Issues to the RoadMap

My suggestion for this is to keep it simple. Avoid too many labels, statuses and complexity.

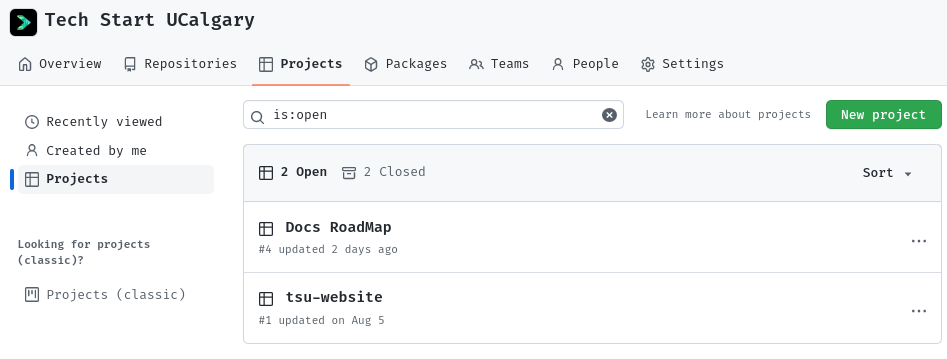

First, create a Project (a RoadMap) on GitHub. This is usually under the Projects tab in the organization view:

Then define some statuses:

- 🆕 New: Where you place all of the issues initially.

- 📋 Backlog: The issues that are yet to be done, and their priority.

- 🏗 In progress: The issues that are being worked on, and who's working on them.

- ✅ Done: The issues that have been shipped.

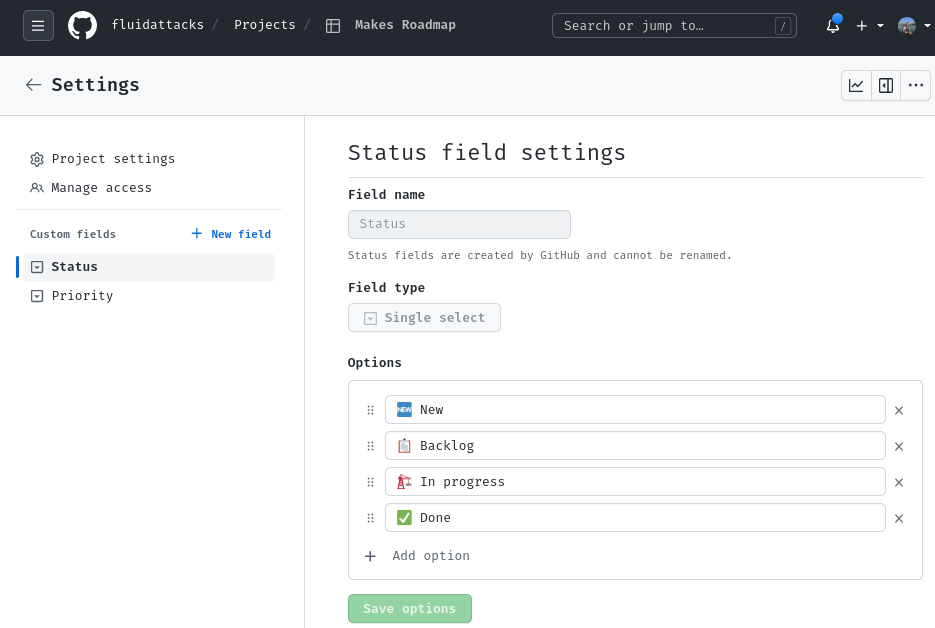

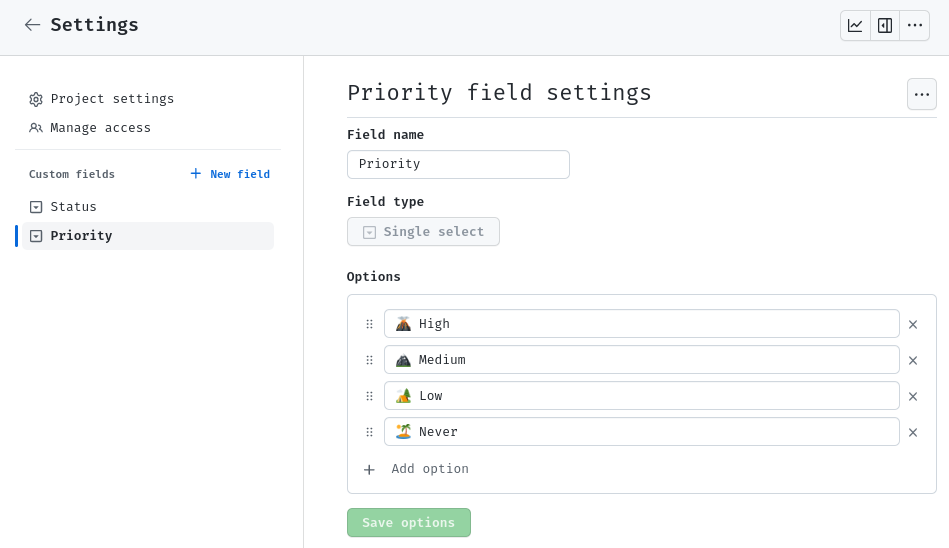

Then, create a priority field:

This will help your team focus on the things that are more impactful to your project.

You can use the Eisenhower matrix for this:

- 🌋 High priority: Issues that are important and urgent.

- 🏔 Medium priority: Issues that are important, but not urgent.

- 🏕 Low priority: Issues that are not important, but may be nice to have someday.

- 🏝 Never priority: Just close the issue :) don't waste your time on this.

Now you can start adding Issues to your RoadMap:

And see what is the progress towards each Milestone, for example:

Career Paths

There are several areas of software development that cover different aspects of the software development life cycle. Usually, people focus on only one or a couple of them. Each of them is a big area on its own and takes years to learn:

-

Front-end development: This area of software development is concerned with the user interface (UI) and user experience (UX) of software applications. Front-end developers work with technologies like HTML, CSS, and JavaScript to create the visual elements that users interact with.

-

Back-end development: This area of software development is concerned with the server-side of software applications. Back-end developers work with technologies like PHP, Python, Ruby, and Java to create the logic that powers software applications.

-

Full-stack development: Full-stack developers work on both the front-end and back-end of software applications. They have knowledge and skills in both areas of development and can build complete applications from start to finish.

-

Mobile development: This area of software development is concerned with creating applications for mobile devices. Mobile developers work with technologies like Java, Swift, and Kotlin to create native apps for iOS and Android devices.

-

DevOps: DevOps is a methodology that focuses on collaboration between development and operations teams to automate the software delivery process. DevOps engineers use tools like Jenkins, Ansible, and Docker to automate the software development process.

-

Testing and quality assurance: This area of software development is concerned with ensuring that software applications are reliable, bug-free, and meet the requirements of the end-users. Testing and quality assurance engineers use tools like Selenium, JMeter, and LoadRunner to test software applications.

-

Data science and analytics: This area of software development is concerned with creating applications that analyze and interpret data. Data science and analytics engineers work with technologies like Python, R, and SQL to build applications that can perform data analysis, machine learning, and statistical modeling.

-

Artificial intelligence and machine learning: This area of software development is concerned with creating applications that can learn and make decisions based on data. AI and ML developers work with technologies like Python, TensorFlow, and PyTorch to build applications that can perform tasks like natural language processing, image recognition, and predictive modeling.

-

Cybersecurity: This area of software development is concerned with creating secure software applications and protecting them from malicious attacks. Cybersecurity engineers work with technologies like encryption, firewalls, and intrusion detection systems to secure software applications.

-

Cloud computing: This area of software development is concerned with creating applications that can run on cloud computing platforms like Amazon Web Services, Microsoft Azure, and Google Cloud Platform. Cloud developers work with technologies like containerization, serverless computing, and cloud storage to build and deploy applications on the cloud.

-

Embedded systems: This area of software development is concerned with creating software for embedded devices like medical devices, smart appliances, and automotive systems. Embedded systems developers work with technologies like C, C++, and assembly language to create software that runs on these devices.

-

Augmented and virtual reality: This area of software development is concerned with creating applications that allow users to interact with virtual or augmented environments. AR and VR developers work with technologies like Unity, Unreal Engine, and WebXR to build immersive applications for gaming, education, and training.

-

Project management: Involves overseeing the entire software development process from conception to delivery. Project managers are responsible for ensuring that the project is completed on time, within budget, and to the required quality standards.

-

Product owner: The product owner is responsible for defining and prioritizing the features and requirements of a software application. They work closely with development teams, stakeholders, and customers to ensure that the product meets the needs of the users.

-

Technical writing: Technical writers create documentation and user manuals for software applications. They work with developers and other technical experts to create user-friendly documentation that explains how to use the software application.

-

User experience (UX) design: UX designers create the visual design and user experience of software applications. They work with developers to ensure that the application is easy to use and visually appealing.

-

Technical support: Technical support engineers provide support to users who are experiencing issues with software applications. They work with developers to diagnose and resolve technical issues, and provide customer support to users.

-

Technical architecture: Technical architects are responsible for designing the technical architecture of software applications. They work with development teams to ensure that the architecture meets the scalability, security, and performance requirements of the application.

-

Database administration: Database administrators are responsible for managing the data that is used by software applications. They work with developers to design and implement databases, and ensure that the data is stored and managed effectively.

Front End

A front-end engineer is a software engineer who specializes in building the user interface (UI) and user experience (UX) of a website or application. They are responsible for creating visually appealing and intuitive designs that allow users to interact with the application or website seamlessly.

The front-end engineer's job involves using various technologies such as HTML, CSS, and JavaScript to build the visual elements of an application or website. They work closely with designers to turn mockups and wireframes into fully functional websites or applications that are easy to use and navigate.

Front-end engineers also collaborate with back-end engineers to integrate the front-end code with the back-end code, ensuring that the entire system functions correctly. They are responsible for testing and debugging the front-end code, optimizing the code for performance, and ensuring that the website or application is compatible with different browsers and devices.

How to become a front-end engineer?

To become a front-end engineer, you will need to follow these general steps:

-

Learn HTML, CSS, and JavaScript (or TypeScript): HTML is used to structure web pages, CSS is used to style them, and JavaScript is used to add interactivity and dynamic behavior. These are the core technologies used in front-end development.

-

Learn a front-end framework: While it's not strictly necessary, learning a front-end framework like React can help you to build more complex web applications more efficiently.

-

Build projects: To gain practical experience, you should work on building your own projects or contributing to open-source projects. This will help you to develop your skills and build a portfolio of work that you can show to potential employers.

-

Stay up-to-date: Front-end development is a rapidly evolving field, so it's important to stay up-to-date with the latest technologies and best practices. Attend conferences, read blogs and forums, and participate in online communities to stay current.

-

Apply for jobs: Once you have the skills and experience, it's time to start applying for front-end engineering jobs. Look for job postings online, reach out to recruiters or companies directly, and attend networking events to make connections in the industry. Be prepared to showcase your portfolio and be able to talk about your skills and experience during interviews.

DevOps

DevOps is a software development approach that emphasizes collaboration and communication between software developers and IT operations professionals. The goal of DevOps is to improve the efficiency and quality of software development by breaking down silos and promoting a culture of collaboration, automation, and continuous improvement.

Traditionally (https://clickup.com/blog/waterfall-project-management/), software development and IT operations have been two separate functions within organizations, with little interaction between them. DevOps seeks to bridge this gap by fostering collaboration between these teams throughout the entire software development lifecycle, from planning and development to deployment and maintenance.

DevOps involves using tools and practices such as continuous integration, continuous delivery, and infrastructure automation to streamline the software development process and make it more efficient. By automating tasks like testing, building, and deploying software, teams can reduce errors, speed up the development process, and deliver software more quickly and reliably.

Overall, DevOps is a holistic approach to software development that aims to create a culture of collaboration and continuous improvement, with the goal of delivering software more efficiently and with higher quality.

How to become a DevOps engineer?

-

Understand the why and the high-level how (the philosophy).

For instance:

- Get to know The Three Ways: https://itrevolution.com/articles/the-three-ways-principles-underpinning-devops/.

- Read a book: https://www.amazon.com/DevOps-Handbook-Second-World-Class-Organizations/dp/B09L56CT6N.

- Win in this game: https://devops.games/.

-

Learn the core skills: DevOps engineers need a strong foundation in software development, You should have a basic understanding of programming languages, and be familiar with version control systems like Git. You should also have experience working with Linux/Unix systems and be familiar with cloud platforms like AWS, Azure, or Google Cloud Platform.

-

Gain experience with DevOps tools and practices: DevOps engineers should be familiar with tools and practices such as continuous integration/continuous delivery (CI/CD), infrastructure as code, configuration management, and monitoring and logging. You can gain experience by working on projects, contributing to open-source projects, or taking online courses.

-

Learn automation: Automation is a key part of DevOps, so you should have experience with containerization tools like Docker and Kubernetes.

-

Build projects: To gain practical experience, you should work on building your own projects or contributing to open-source projects. This will help you to develop your skills and build a portfolio of work that you can show to potential employers.

-

Stay up-to-date: DevOps is a rapidly evolving field, so it's important to stay up-to-date with the latest tools and practices. Attend conferences, read blogs and forums, and participate in online communities to stay current.

-

Apply for jobs: Once you have the skills and experience, it's time to start applying for DevOps engineering jobs. Look for job postings online, reach out to recruiters or companies directly, and attend networking events to make connections in the industry. Be prepared to showcase your portfolio and be able to talk about your skills and experience during interviews.

Technical Demos

Overview

At some point in your career you will need to demo some work you've done to both technical and non-technical stakeholders (people with an interest in the work you're doing).

When you do this, you want to make sure you give yourself and your team the best opportunity to show off your hard work. Here are some tips and ideas to think about when preparing, and do prepare if it's for anyone other than your own team.

Purpose

The work you did will have achieved something, whether it's fixing a bug, solving a problem for your users, or presenting an entirely new idea.

It's important to show this if you want to convince others that your work is important. Feel free to show the existing problem and then go into how you have addressed it, this will make the change that much more impactful.

Presentation

Live demos are always best, it proves to your audience that it actually works and that there's no smoke and mirrors hiding flaws. However, there are cases where live demos don't work out. Maybe you have a very slow running process, or your code just decides it's not going to work that day.

For slow processes, explain that it's slow and why, and let the audience know how you've addressed it in the demo to set the right expectations.

Here are some methods:

- Run the process before starting the demo in another tab/instance/device, and switch to that when you need to show what happens after it.

- Do something else while it's running, e.g. talk about some other detail in your presentation.

- Pre-record the whole demo and speed up or cut out the slow parts.

It's a good idea to have a well-edited pre-recorded demo to switch to as well, sometimes code just decides not to work!

Make sure you've practiced talking over the pre-recorded demo as well as the live demo so you're prepared for all cases, including switching from live to recorded halfway through.

Avoid Distractions

It's not the end of the world, but a notification popping up on your screen is distracting to the audience.

It's best to fully close all unneeded apps, turn on do-not-disturb, and full-screen what you're presenting.

This also goes for browser tabs and bookmarks, have everything as clean and empty as possible so all the focus is on what you're presenting.

Use Virtual Desktops on Windows and full-screen apps on MacOS. Place your presentation in one, and the demo in another, that way you can quickly and seamlessly switch between the two without alt-tabbing or exiting the presentation.

Prepare

Most importantly, rehearse the demo! Go through everything you want to show off, and have a good idea of what you want to say. It doesn't need to be perfectly memorized, but you should know exactly what you're doing next all the time. If you're working with a team, and one person is speaking and the other controlling the demo, make sure both of you are in sync. If you say "looking at the user profile page", the demo should immediately go there, without you having to ask your partner to do things which breaks the flow.

Finally, if (and when) something goes wrong, the best thing to do is to move past it. Bringing more attention to an issue can make it seem worse than it is. If things are really bad (app fully crashes, laptop catches fire), you have a recorded demo to switch to. Right?

References/Further Reading

How to create great tech demos and presentations - Thomas Maurer

13 Demoing Strategies That Make Tech Software Compelling

Tech Start's Django Guide

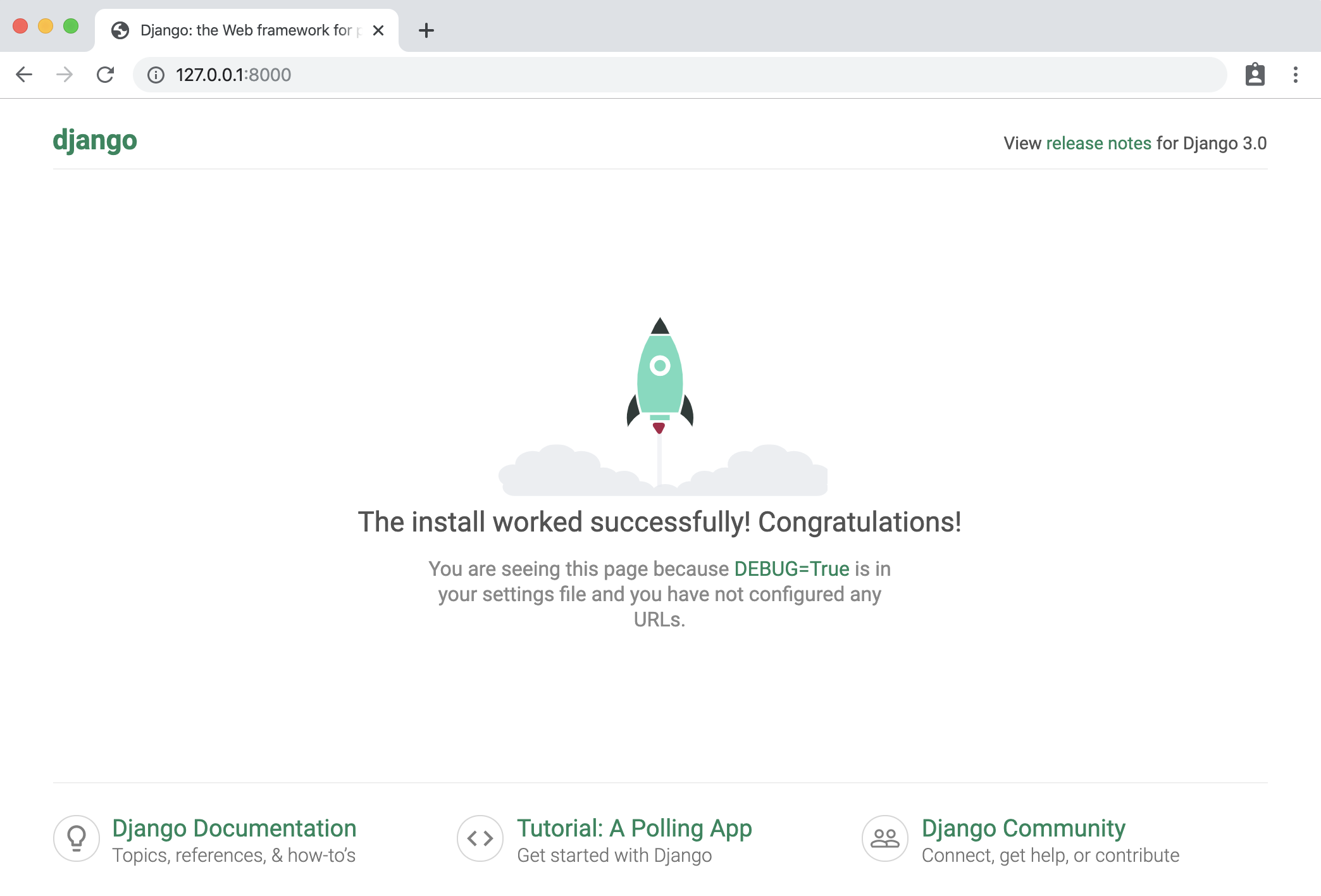

Django is a free and open source python framework that lets you build awesome backends for websites, apps, and more. You can use it to host databases and build secure APIs for them without writing a line of SQL. You can also use it to create multi-page applications with dynamically served content. It makes it easy to get started building complex functionalities by automating a lot of the boilerplate code that you'd normally have to write.

We recommend that you have a basic knowledge of python before using django! This will help you debug any errors that you get.

(Img src: https://medium.com/crowdbotics/when-to-use-django-and-when-not-to-9f62f55f693b)

Table of Contents

- Requirements

- Setup

- Installing Dotenv

- Installing Postgres and psycopg2

- Creating a Postgres database

- Connecting Django to Postgres

- Writing Models

- Run your app

- URLs

- Views

- Django REST Framework

- Serializers

- Django REST Framework: Class based Views

- Testing your API

Requirements

First you will need to install Homebrew, Python, Pip, and Pyenv. If you have not done so already, please follow the instructions in the Installfest section.

Setup

To create a Django project first wee need to create a directory for the project. To do so, run the following command:

mkdir <project-name>

Then, we need to navigate to the directory we just created. To do so, run the following command:

cd <project-name>

Now, we need to create a virtual environment for our project. To do so, run the following command:

pyenv virtualenv .venv

Then, we need to activate the virtual environment. You need to do this every time you want to run your project. To do so, run the following command:

source .venv/bin/activate

If you want to deactivate your virtual environment when you're done working on your project, run the following command:

deactivate

Now, we need to install Django. To do so, run the following command:

pip install django

To check if Django is installed, run the following command:

python3 -m django --version

Next, let's create a project.

django-admin startproject <project-name> . # the dot is important! it will create the project in the current directory

Good to know: Projects vs. apps

What's the difference between a django project and a django app? An app is a Web application that does something – e.g., a Weblog system, a database of public records or a small poll app. A project is a collection of configuration and apps for a particular website. A project can contain multiple apps. An app can be in multiple projects.

python3 manage.py startapp <your-app-name>

This creates an app within your project. You can create as many apps as you want within a project.

Next step: include your app in the INSTALLED_APPS fields in settings.py (just the name)

INSTALLED_APPS = [

'django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

...

'your-app-name',

]

Installing Dotenv

Dotenv is a zero-dependency module that loads environment variables from a .env file into process.env. Storing configuration in the environment separate from code is based on The Twelve-Factor App methodology. To install dotenv, run the following command:

pip install python-dotenv

Then freeze the requirements. To do so, run the following command:

pip freeze > requirements.txt

This will create a file called requirements.txt that will contain all the packages that are installed in your virtual environment. This file will be useful for when you need to install the same packages in another virtual environment. After adding a new package to your virtual environment, you will need to freeze the requirements again.

Next, go to your project and create a .env file. To do so, run the following command:

touch .env

Then go to your settings.py file and add the following code:

from django.core.management.utils import get_random_secret_key

from dotenv import load_dotenv

...

load_dotenv()

# SECURITY WARNING: keep the secret key used in production secret!

# Copy the secret key from the .env file

SECRET_KEY = os.getenv("DJANGO_SECRET_KEY", get_random_secret_key())

Your .env file should look like this:

DJANGO_SECRET_KEY=your-secret-key

Installing Postgres and psycopg2

PostgreSQL is a powerful, open source object-relational database system with over 30 years of active development that has earned it a strong reputation for reliability, feature robustness, and performance. To install Postgres, run the following command:

brew install postgresql

To check if Postgres is installed, run the following command:

postgres --version

psycopg2 is a PostgreSQL database adapter for the Python programming language. To install psycopg2, run the following command:

pip install psycopg2

Then freeze the requirements. To do so, run the following command:

pip freeze > requirements.txt

Then go to your settings.py file and add the following code:

INSTALLED_APPS = [

...

'psycopg2',

]

Creating a Postgres database

To create a Postgres database, run the following command:

createdb <database-name>

Remember your credentials for the database. You will need them later.

It is also recommended to install pgAdmin, a free and open-source administration and development platform for PostgreSQL and its related database management systems. To install pgAdmin, run the following command:

brew install --cask pgadmin4

Connecting Django to Postgres

Add the following code to your .env file:

DATABASE_NAME=<database-name>

DATABASE_USER=<database-user>

DATABASE_PASSWORD=<database-password>

Now go to your settings.py file and add the following code:

import os

...

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.postgresql',

'NAME': os.getenv('DATABASE_NAME'),

'USER': os.getenv('DATABASE_USER'),

'PASSWORD': os.getenv('DATABASE_PASSWORD'),

'HOST': "127.0.0.1",

'PORT': "5432",

}

}

Writing Models

Models allow you to define the content of your database. If you don't need content in your database, you won't need models.

You can follow along with this section here:

https://docs.djangoproject.com/en/3.1/intro/tutorial02/

More about models: https://docs.djangoproject.com/en/3.1/topics/db/models/

You will define all your models in models.py, located within the folder for your app.

from django.db import models

# Create your models here.

class Album(models.Model):

name = models.CharField(max_length=200)

artist = models.CharField(max_length=100)

year_released = models.DateField()

def __str__(self):

return str(self.name)

class Song(models.Model):

song_name = models.CharField(max_length=100)

album = models.ForeignKey(Album, on_delete=models.CASCADE)

num_streams = models.IntegerField()

def __str__(self):

return str(self.song_name)

Each model should correspond to the structure of a table of a relational model of a database. If you don't know what this means, ask someone who has taken CPSC 471 (or an equivalent databases course)

Django can convert these into real SQL tables!

- Good to know: Primary Keys: In the above example we didn't specify any ids for our models (normally, with databases, you want an id to be your primary key). Django automatically creates an ID field to be the primary key for each model and takes care of auto-incrementing, unless you specifically override it. I don't recommend overriding, it's not worth the effort (and its doubly complicated and not worth it to have a primary key composed of several fields)

- Good to know: str: the str function is Python's default function for string representation. In this case, it's good practice to override this for your models. This will help you understand your data if you login via the admin view (I'll show how to do this later)

- Good to know: Foreign Keys: See the Song model class for how you can reference a foreign key belonging to another model (in this case it refers to Album). You don't need to refer to a foreign model's keys directly, all you need to do is specify which table you are referencing. Also note: if you are referring to a table, it needs to be defined above the point in the code where you are referring to it.

There are more options that can be explored about how you can define your models, but this should be a good base for you to do your own research :)

Now we're ready to convert these into a real database! By default, Django will make a migration file that has your database.

Converting models into your database

https://docs.djangoproject.com/en/3.1/intro/tutorial02/

>> python3 manage.py makemigrations appName

Creates migrations for the changes you made in appName

>> python3 manage.py migrate

Migrates the changes you made into your database

Run your app

Whenever you are ready to run your server, just call this command!

python3 manage.py runserver

You should see something like this:

By default, this will run the Django server on localhost:8000. View the django documentation to see how you can run it on a different port. You can now access it from your web browser by visiting http://localhost:8000 !

You can also create a superuser (admin) to view the inner contents of your database. To do this, you first need to create them from the command line using the following command:

python3 manage.py createsuperuser --username yourNameHere --email yours@email.ca

This will create a super user with your provided info (it will prompt you to enter a password as well).

The following command creates a token for the superuser that you can use for authentication in requests. If you are not using Django Rest Framework, this is not applicable to you.

python3 manage.py drf_create_token yourSuperUserName

Note: if you're trying to run these for your deployed app on heroku, you need to prepend heroku run before those commands! See the Heroku section for a description on how you can deploy it.

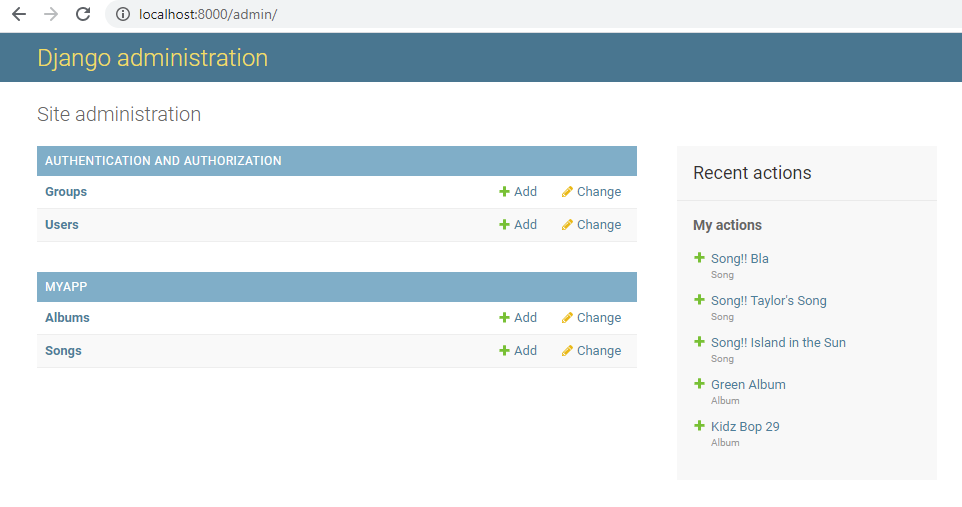

You can see the admin page of your website to view the inner content of your database. This is automatically created by Django. Visit http://localhost:8000/admin and enter your passcode.

If you want your models to show up in the admin page, you will need to specify them in admin.py like this:

from django.contrib import admin

from .models import Album, Song

# Register your models here.

admin.site.register(Album)

admin.site.register(Song)

Once you log in to the admin site, you should see something like this. From here, you can add & remove database entries.

URLs

URLs allow you to define the paths that exist in your system, and what happens when you call them.

URLs documentation: https://docs.djangoproject.com/en/3.1/ref/urls/

How URLs are processed in Django: https://docs.djangoproject.com/en/3.1/topics/http/urls/#how-django-processes-a-request

Read more: https://docs.djangoproject.com/en/3.1/intro/tutorial03/

If you're constructing a big application, it's standard practice in django to include different _apps _for each part of your system, and link them to the main project.

However, since we're only making small-scale side-projects, it's fine to ignore this best-practice and include everything in a single app. Just understand that in a large industrial scale project you wouldn't necessarily want to do this.

// urls.py in a project:

from django.contrib import admin

from django.urls import path, include

urlpatterns = [

path('admin/', admin.site.urls),

path('myApp/', include('myApp.urls'))

]

// example urls.py in myApp folder:

from django.urls import path

from . import views

urlpatterns = [

path('hello_world', views.ping, name='Hello World!'),

path('hello-x/<str:hello_to>', views.hellox, name='Hello to x'),

path('hello-x/<print_me>/print', views.printx, name='Print this!'),

path('goodbye', views.goodbye, name='goodbye'),

]

Now you can visit a path using http://localhost:8000/myApp/hello-world, for example.

Views

**Views **allow you to define what happens when you access a certain url in your system (using your browser, an API tool like Postman, or something else altogether). In your views, you could define interactions with the model (your database) or entirely different interactions altogether. You can use the definition of the view to call external processes.

If you want to make more complicated views and understand the Request and Response items, read this:

https://docs.djangoproject.com/en/3.1/ref/request-response/

To understand views more in-depth, read the documentation: https://docs.djangoproject.com/en/3.1/topics/http/views/

Here are some simple examples of what you can do with a view. Note that these are just examples and don't represent best practice at all.

from django.http import HttpResponse, response

# views.py

def ping(request):

myRes = "Hello World!"

return HttpResponse(myRes)

def hellox(request, hello_to):

myRes = {"My Reply": "Hello " + hello_to}

return response.JsonResponse(myRes)

def printx(request, print_me):

print("Hello to " + print_me)

return response.HttpResponseNotFound("I printed it!")

def goodbye(request):

if not (request.method == 'GET'):

return response.HttpResponseBadRequest()

queryParams = request.GET

msg = queryParams.get('msg', "Gamers")

return response.JsonResponse({"Reply": "Goodbye " + msg})

Now, we want to adhere to DRY (Don't repeat yourself) when creating views. Therefore, it is almost always best to define your views as Class-Based views (CBVs) which handle more of the boiler plate code for you and help ensure your views follow standards.

Please read more about class-based views here: https://docs.djangoproject.com/en/3.1/topics/class-based-views/

Both the above docs and the docs for views also show how you can interact with your database items through a view. But, if you're building an API, I highly recommend using the tools in the following section: Django REST Framework.

Once you have defined your views and given them a corresponding url, you can test them out.

python3 manage.py runserver

Run your server, and using either a web browser, or preferably an API testing tool like Postman (https://www.postman.com/) access the proper urls (ex. http://localhost:8000/myApp/hello_world) to see if they have the expected behavior.

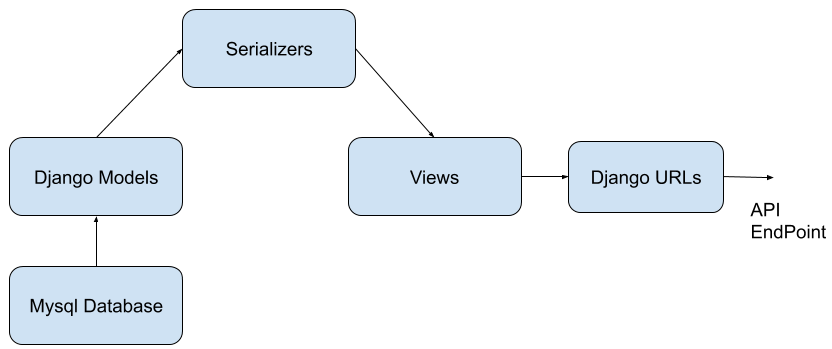

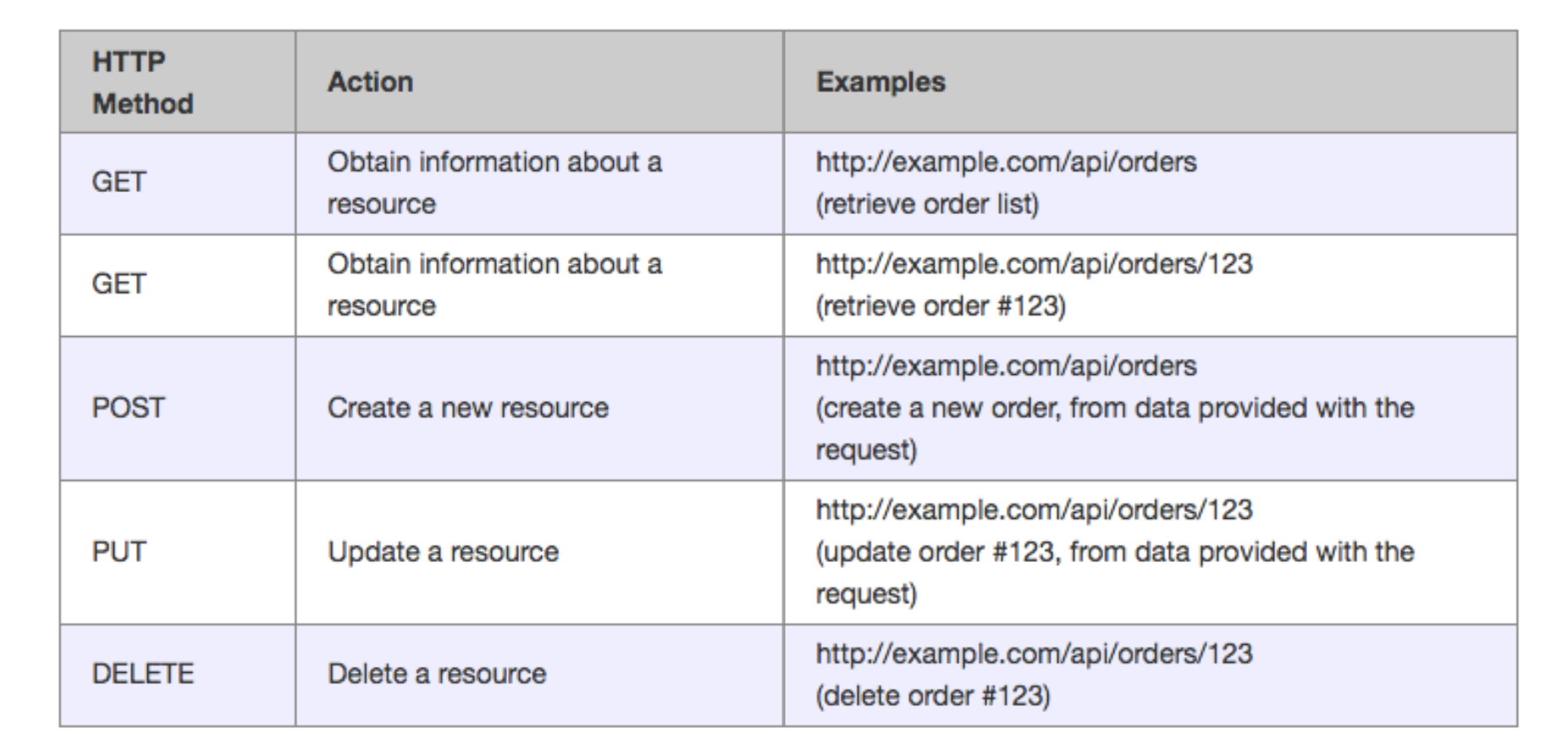

Django REST Framework

Django REST Framework is an add-on to Django that makes it simple to develop REST-compliant APIs. There is great documentation here: https://www.django-rest-framework.org/ <--- FOLLOW INSTALL INSTRUCTIONS

What is a RESTful framework? Learn more here: https://restfulapi.net/

Django REST Framework provides you with tools to make class-based views to easily implement database CRUD (Create Read Update Destroy) operations, as well as define more complex operations.

Before we define any endpoints with Django REST Framework, let's make some serializers.

Serializers

Django REST Framework uses serializers as a way to perform **translation **of your models from your python code and your database into data formats like JSON and XML that an API might use. Read more about them here:

https://www.django-rest-framework.org/api-guide/serializers/

We should define some basic serializers so that we can make API endpoints that interact with the content of our database models.

- Create a new file called serializers.py inside the app you want to use serializers with.

- Create your serializers. Give them a relevant name (though the exact syntax is not important)

- List the fields that you want your serializer to translate. If you don't want it to translate a particular field, then don't include it.

Here's an example, using the Song and Album models we defined earlier. Here's what's at the top of serializers.py:

from rest_framework import serializers

from .models import *

class SongSerializer(serializers.ModelSerializer):

class Meta:

model = Song

fields = ("id", "song_name", "num_streams")

class AlbumSerializer(serializers.ModelSerializer):

class Meta:

model = Album

fields = ("name", "year_released", "artist", "id")

Make sure your fields match exactly the names that you used in your models.

You may be curious why I also included an id, when we didn't define one in our models- this is because Django auto generated an id for us in this models because we didn't specify a primary key. This id field always has the name id. It is often useful for our API, so we'll include it.

We can also create multiple serializers for the same models, if we wanted different behavior. For example, what if we wanted to include the album id of the song?

class SongSerializerWithAlbumId(serializers.ModelSerializer):

class Meta:

model = Song

fields = ("id", "song_name", "num_streams", "album")

This would include the album's PK (in this case, it's id, but if the PK was different, it'd be something else).

What if we wanted to include the full album info when an api request was made to see the song? Here's another example serializer that we could make:

class SongSerializerFullAlbum(serializers.ModelSerializer):

myFullAlbumDesc = AlbumSerializer("album", read_only=True)

class Meta:

model = Song

fields = ("id", "song_name", "num_streams", "myFullAlbumDesc")

It's using our album serializer from earlier to serialize a field, which must (read only is an optional parameter that makes it so that it's only included in reading requests, not create/update/destroy.)

This was just an introduction to serializers. If you want to use more complex behaviors, you'll have to do the research on your own.

Django REST Framework: Class based Views

Pre-requisite to this section: understand URLS and views in vanilla Django, and read the serializers section

More reading: https://www.django-rest-framework.org/tutorial/3-class-based-views/

Video overview of similar topic: https://www.youtube.com/watch?v=akvFA5VMXJU

You can use Django's Class Based Views to quickly create views that can do CRUD (Create, Read, Update, Destroy) operations on your database.

In views.py:

from rest_framework.views import APIView

from rest_framework import generics

from rest_framework import status

from .models import *

from .serializers import *

Some class based views that we'll define. Right now these are just the generic create, read, update, destroy views. By defining these views with the classes, Django REST Framework takes care of the default behavior for us. It's that easy!

class SaveSong(generics.CreateAPIView):

queryset = Song.objects.all()

serializer_class = SongSerializerWithAlbumId

class GetSongs(generics.ListAPIView):

queryset = Song.objects.all()

serializer_class = SongSerializer

class DeleteSong(generics.DestroyAPIView):

queryset = Song.objects.all()

serializer_class = SongSerializer

class UpdateSong(generics.RetrieveUpdateAPIView):

queryset = Song.objects.all()

serializer_class = SongSerializerWithAlbumId

Notice that we need to make the create and update serializers include the album ID- if we didn't then you couldn't create song objects since their album id must be _not null._This same principal applies to any model that has a foreign key which isn't allowed to be null.

Before we can use the views we created, we need to hook them up to a URL, just like you would for any other view. Do keep in mind that we need to call the as-view function on them, though. Here is an example of the URLs for the previous views. This pattern is how we normally define CRUD endpoint urls for any entity in a database

path('song', views.GetSongs.as_view(), name='songs'),

# Create a song

path('song/create', views.SaveSong.as_view(), name='Save Song'),

#Updates a specified license with information

path('song/<int:pk>', views.UpdateSong.as_view(), name='Update Song'),

# Deletes a song specified by pk

path('song/<int:pk>/delete', views.DeleteSong.as_view(), name='Delete Song'),

If you are using a pk that is not an int (you manually defined a pk instead of using the default id generated), you'll have to specify that accordingly.

What if we want more complex behavior beyond the default predefined classes? We can modify them to add more conditions to what is returned.

In this example, we added an optional way to filter songs by album, using a query_param called album. You'll need to read documentation and tutorials if you want to know more about the custom behavior you can define within your Django REST Framework views.

class GetSongInAlbum(generics.ListAPIView):

serializer_class = SongSerializer

def get_queryset(self):

queryset = Song.objects.all()

alb = self.request.query_params.get('album', None)

queryset = queryset.filter(album=alb)

return queryset

If you have a view that isn't necessarily linked to CRUD actions, or has more complex usage and needs more custom defined behavior, you can use APIView.

Test out your Django REST API

Compile and run your app with

python3 manage.py runserver

Use your bugfixing wizardry to fix any errors that might show up. Now you should be ready to give those predefined endpoints you made for a spin!

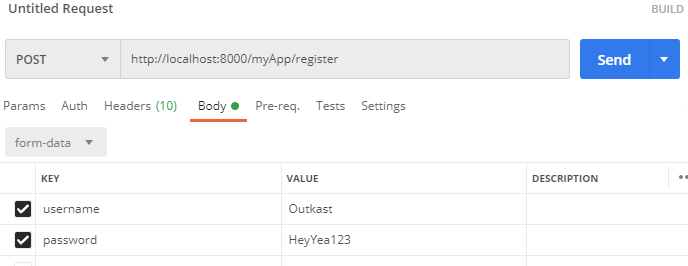

Here's some examples that I did using Postman for API testing. If you used Django REST Framework, it should also come with a built-in API testing tool that you can use in your browser.

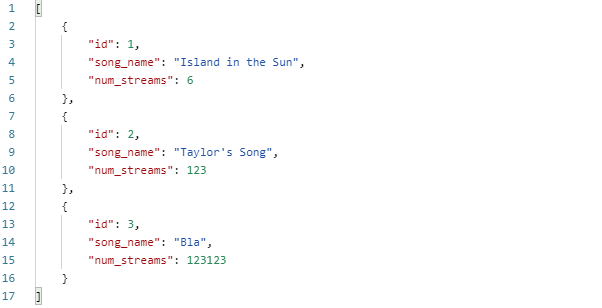

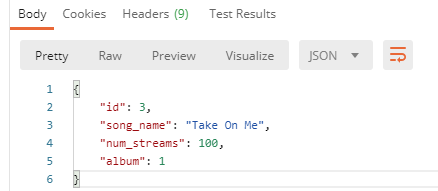

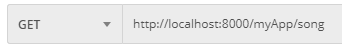

Here's a simple GET request. This is a database read operation, and it's pretty simple. Your browser is making GET requests to every URL you visit while you surf the web.

| Request |

|

| Response |

|

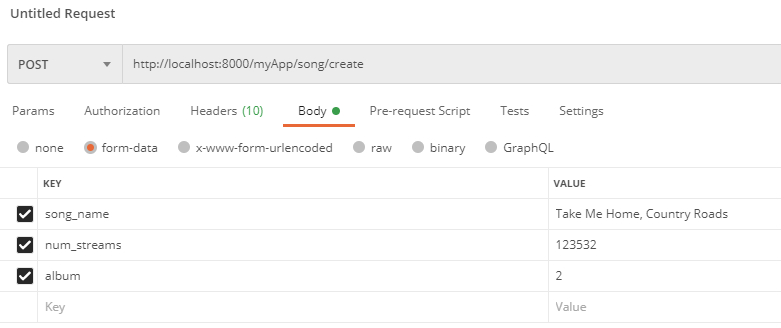

Here's a POST request (it's post because we're _creating _or Posting new data) to our create route. We should include the key-value pairs for the song we want to create in the **_Body _**of our request.

| Request |

|

| Response |

|

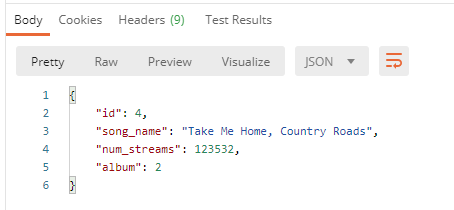

To update, let's follow the URL pattern we defined with the pk of the song we want to update. We can use PUT or PATCH. The info you're sending should be in the _Body _of the request, just like it was for our POST request.

| Request |

|

| Response |

|

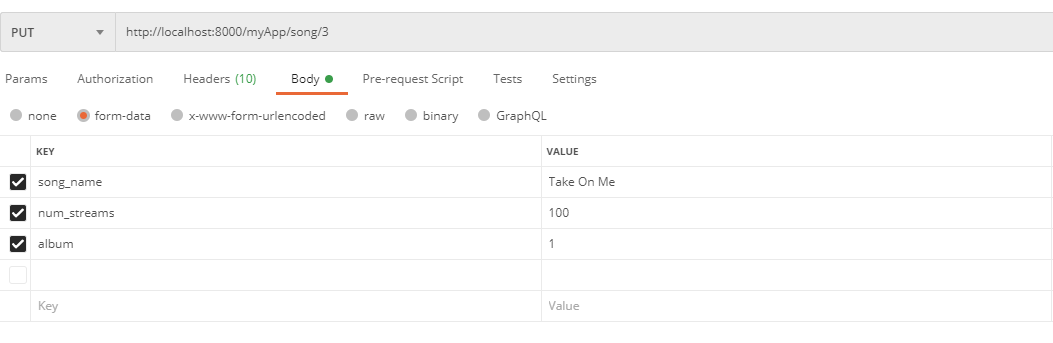

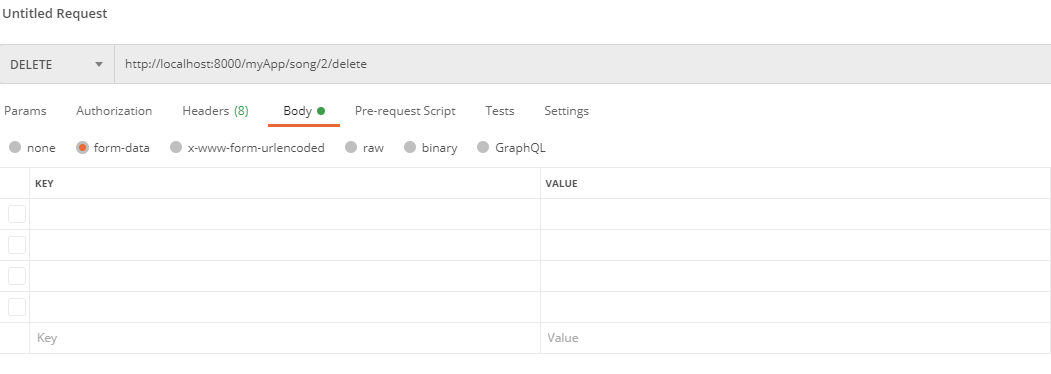

Let's do the same thing for our deleteSong view, but let's delete Taylor's song this time (pk: 2). I'm sure it was no good anyways.

| Request |

|

| Response |

|

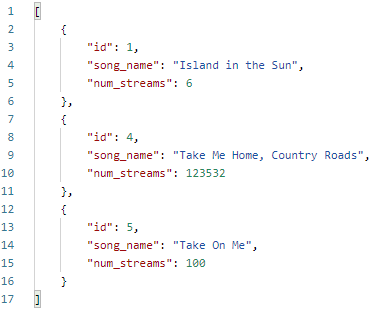

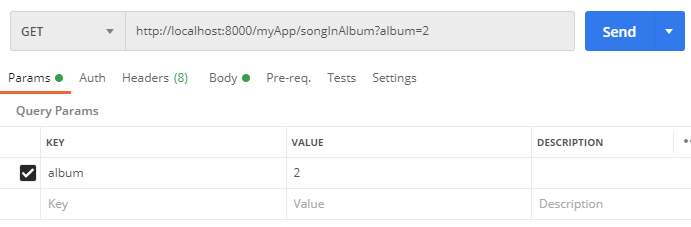

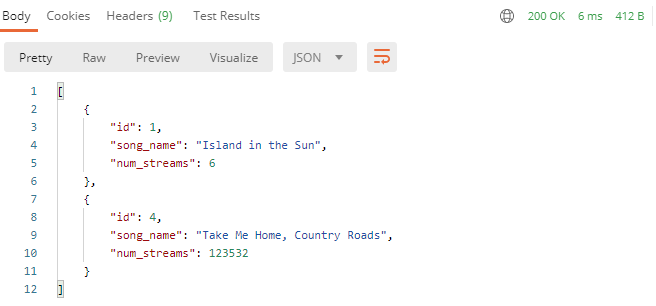

Let's use our GET view to see what's inside the DB now:

**unimportant note: in my zeal to delete taylor's song I had a mishap and accidentally deleted song 3, which I have readded here using a post request. but it's id is now 5 :[

Finally, let's try out that "song with album" route. We'll add it to our urls.py:

# Probably not the best naming convention

path('songInAlbum', views.GetSongInAlbum.as_view(), name='Get song in album'),

Here's what our request will look like. ^^^^^^^^

Here's the response:

Good to know: Query Parameters

Notice how our query params don't have to be specified in urls.py - they are dynamically generated from the URL that we try access (everything that comes after a ? in a url is a query parameter, with keys and values separated by '='. If you had multiple query parameters they would be separated by '&'. Next time you're browsing the web, notice how query parameters are used across the different websites you visit!

It's easy to access query params within Django - see the getSongInAlbum view definition for an example.

Authtokens, users, logins with Django REST Framework

Up to now, we've covered the fundamentals of how to create a database, populate it, and create simple endpoints for creating, updating, and destroying. But what happens when we want our system to be used by real users? How do we store their information and their interactions with our system? There are a few important issues that we'll need to address:

- How do we make sure that users' personal information like their passcodes are being stored in a secure way that protects them from being stolen?

- How do we build a system that users can sign up for and log in to? How do we store info about their session?

- How do we make certain endpoints in our system behave differently depending on the user who is accessing them?

The answer to these questions can be complicated. In order to save your time and energy, we're going to utilize the resources that Django and Django REST Framework provide for us as much as possible instead of developing our own solutions. Not only is this easier, but it's also much more secure- would you trust a system written from scratch by a novice undergrad student with your password and financial information?

How do we store user's personal info?

The answer to this question is usually to use Django's built-in User model. You can read the docs on User models here:

https://docs.djangoproject.com/en/3.1/ref/contrib/auth/

The User model contains common fields that will be used by users, and in your serializers you can define which fields are relevant to your use case.

By default, Django builds the User models for you. You can see them after you runserver and check inside the /admin route.

We can also utilize the User model to build new endpoints in our API, just like we could with any other model. Here's an example:

Models.py

from django.contrib.auth.models import User

…

class UserLikesSong(models.Model):

user = models.ForeignKey(User, on_delete=models.CASCADE)

song = models.ForeignKey(Song, on_delete=models.CASCADE)

Serializers.py

class UserLikesSongSerializer(serializers.ModelSerializer):

class Meta:

model = UserLikesSong

fields = ("id", "user", "song")

#Id of the rel, Id of the user, ID of the song

You can now make endpoints with this just like you would with any other model/serializer. This specific example could be used to track what songs the User likes, like in Spotify.

If you wanted to make a custom User model, you could read more about it here https://simpleisbetterthancomplex.com/tutorial/2016/07/22/how-to-extend-django-user-model.html and do more research, as there are many methods you could use. For basic university usage though, it's 99% of the time going to be faster and easier to roll with the User model they give you out of the box.

If you want to give different categories of users different permissions, see the_ permissions _section (TODO: this won't be done for a while. In the meantime, these links may help: https://www.django-rest-framework.org/api-guide/permissions/ ← Technical overview

https://www.django-rest-framework.org/tutorial/4-authentication-and-permissions/ ← Basic usage example)

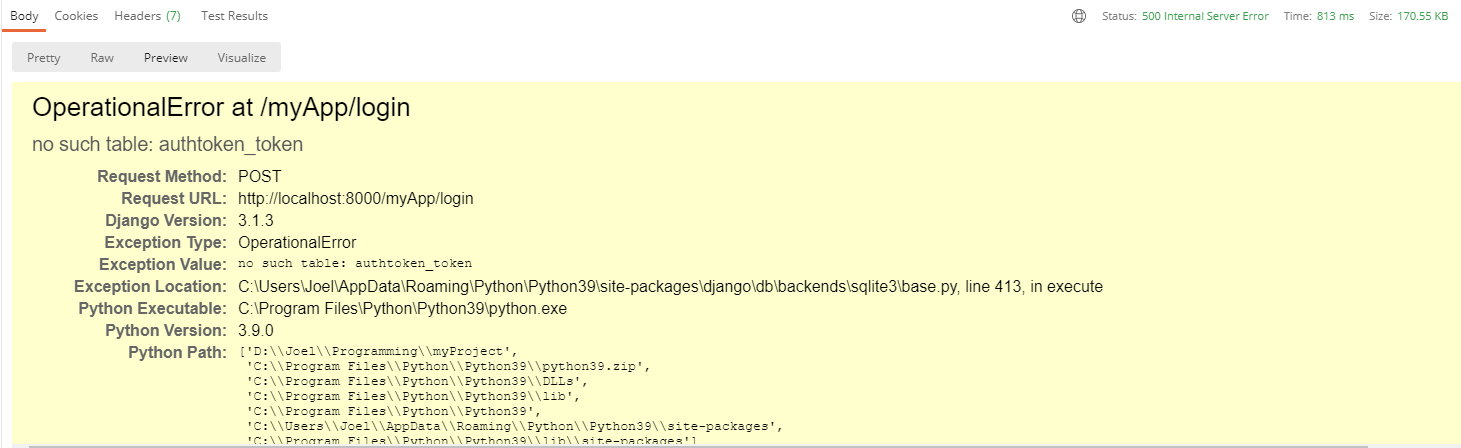

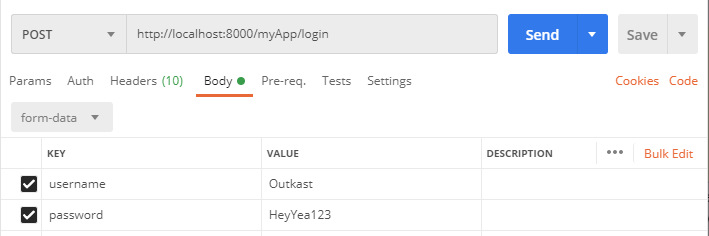

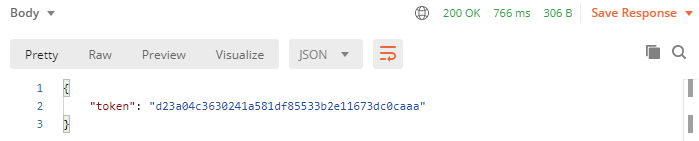

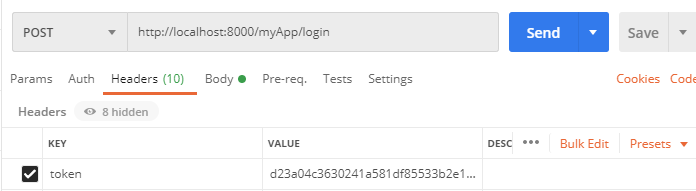

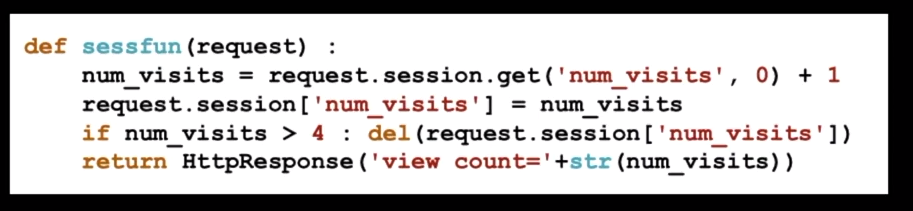

Signup, Login, Sessions: How do we do them?

I highly recommend using Django REST Framework's Authtokens to handle information about user sessions. You can read about authtokens, as well as the other options available, here: https://www.django-rest-framework.org/api-guide/authentication/#tokenauthentication